A variety of digital tools are being used to monitor workers across various industries, some of which use artificial intelligence (AI) to try to gain insights into workers’ performance. These tools can track what workers do on their computers, how they are driving on the job, their movements within the workplace and even their tone of voice when speaking with customers.

In the new Pew Research Center survey, most Americans report unease or uncertainty when contemplating six potential uses of AI by employers to monitor workers. For example, majorities oppose tracking workers’ movements while they work or keeping track of when office workers are at their desks. Majorities also expect that if employers used AI to monitor and evaluate workers, it would lead to employees feeling like they are being inappropriately watched or that the information collected about workers would be misused. Further, Americans express discomfort with AI being used by employers to help make promotion and termination decisions.

That is not to say that Americans don’t think there is potential for improvement. A majority of Americans feel that bias and unfair treatment in performance evaluations due to workers’ race and ethnicity is a problem. And of those who see it as a problem, more believe AI may be able to help rather than hurt in addressing these bias issues – but a notable share thinks AI wouldn’t make much of a difference.

Similar to AI use in hiring, Americans report a general lack of familiarity with AI’s use by employers to monitor how workers are doing their jobs. Some 37% say they have heard or read about employers using AI to collect and analyze information about how workers are doing their jobs, with only 6% having heard or read a lot. A majority (62%) report no familiarity with the topic at all. In fact, roughly half or more of each demographic group analyzed report having heard or read nothing at all about employers’ use of AI to collect and analyze information about how workers are doing their jobs. (For individual demographic groups’ views on this question, see Appendix B.)

Americans have a range of views about AI being used to monitor workers

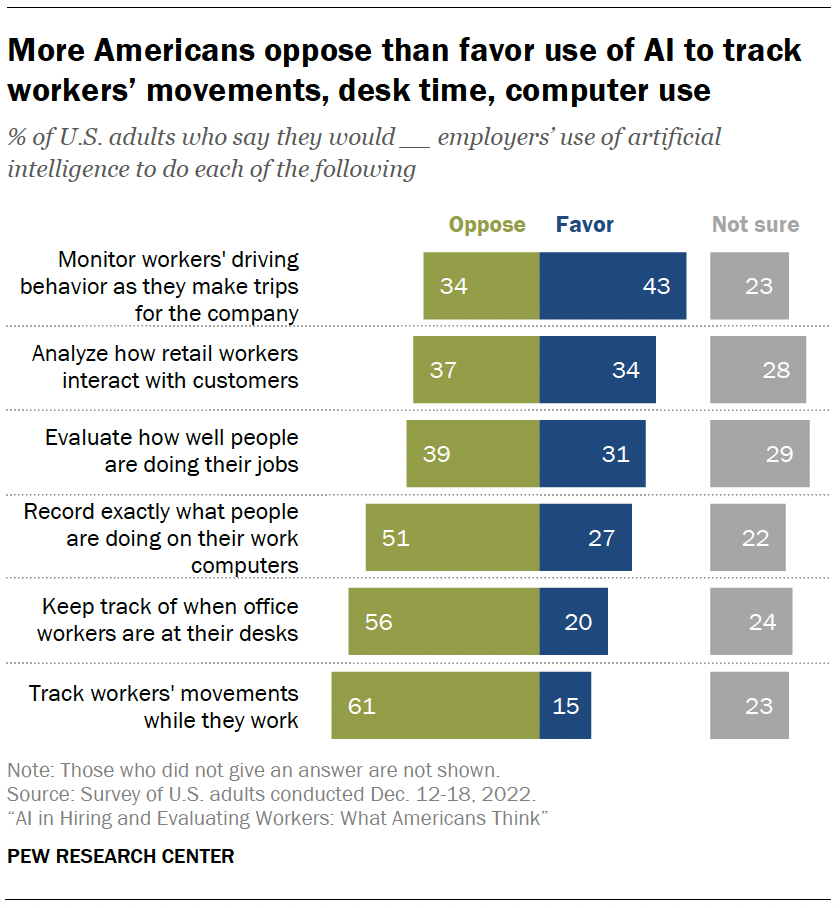

Americans hold varied views about the six different applications of AI for workplace monitoring that are explored in this survey. The public is most accepting of the idea that AI be used by employers to monitor workers’ driving behavior as they make trips for their organization.

Some advocates say AI-based monitoring of drivers is a way to improve driver safety. And some companies have already deployed AI solutions to monitor the driving behavior of their delivery drivers and long-haul truckers. Americans favor using AI to monitor drivers by 43% to 34%.

The public is more divided when it comes to employers using AI to analyze retail workers’ interactions with customers – 34% favor AI being used in such a way while 37% oppose it. This split comes as AI systems are being used at some firms to monitor (and even influence) workers’ interactions with customers.

A plurality opposes the use of AI to evaluate how well people are doing their jobs (39%), and about half oppose using it to record exactly what people are doing on their work computers (51%). In addition, majorities of Americans oppose AI tracking workers’ movements (61%) or keeping track of when office workers are at their desks (56%). These AI solutions are often promoted as a way to better assess employee productivity.

A notable share of the public is uncertain about the use of AI monitoring practices in the workplace. For each of these uses, between 22% and 29% of Americans report being unsure of whether they would favor or oppose employers using AI in that way.

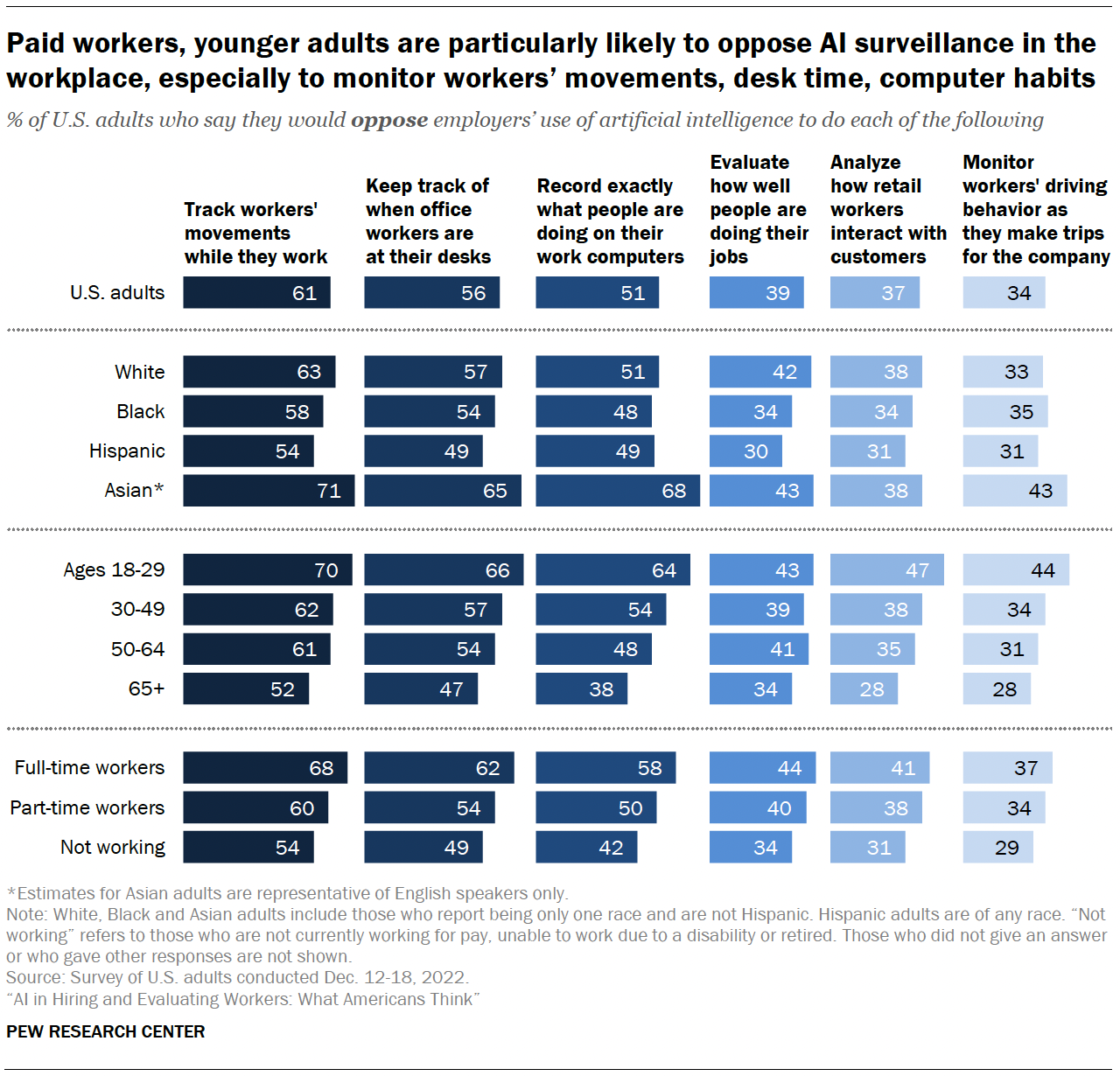

Opposition to types of AI monitoring in the workplace varies across demographic groups. For example, adults under the age of 65 are consistently more likely to oppose each of the six types of AI surveillance at work than those 65 and older. And aside from performance evaluations, adults under 30 stand out from those 30 and older in their opposition to AI surveillance.

When looking at opinions by employment status, paid workers (i.e., full- and part-time workers) are more likely than those not currently working for pay (whether retired, unemployed or unable to work) to oppose each use of AI monitoring in the workplace. Full-time workers are also more likely than part-time workers to say they oppose employers using AI to track workers’ movements while they work, keep track of when office workers are at their desks or record exactly what people are doing on their work computers. For example, 68% of full-time workers oppose employers using AI to track workers’ movements, compared with 60% of part-time workers and 54% of those not currently working.

Asian adults stand out for their opposition to several types of AI monitoring in the workplace. Asian adults are more likely than other racial or ethnic groups to oppose AI being used to track worker movements, desk time and computer habits. Specifically, 71% of Asian adults oppose tracking workers’ movements, compared with about six-in-ten White adults (63%), who in turn are more likely to oppose tracking workers than Black or Hispanic adults (58% and 54%, respectively). A somewhat similar pattern is seen for racial differences in opposition to employers using AI to track when office workers are at their desks. In addition, 68% of Asian adults oppose AI being used to record what people do on their work computers, while about half of White (51%), Hispanic (49%) and Black adults (48%) say the same. Racial differences on the other items are less pronounced.

Adults are more likely to think worrisome things will happen, rather than beneficial things, if AI systems are used to evaluate workers

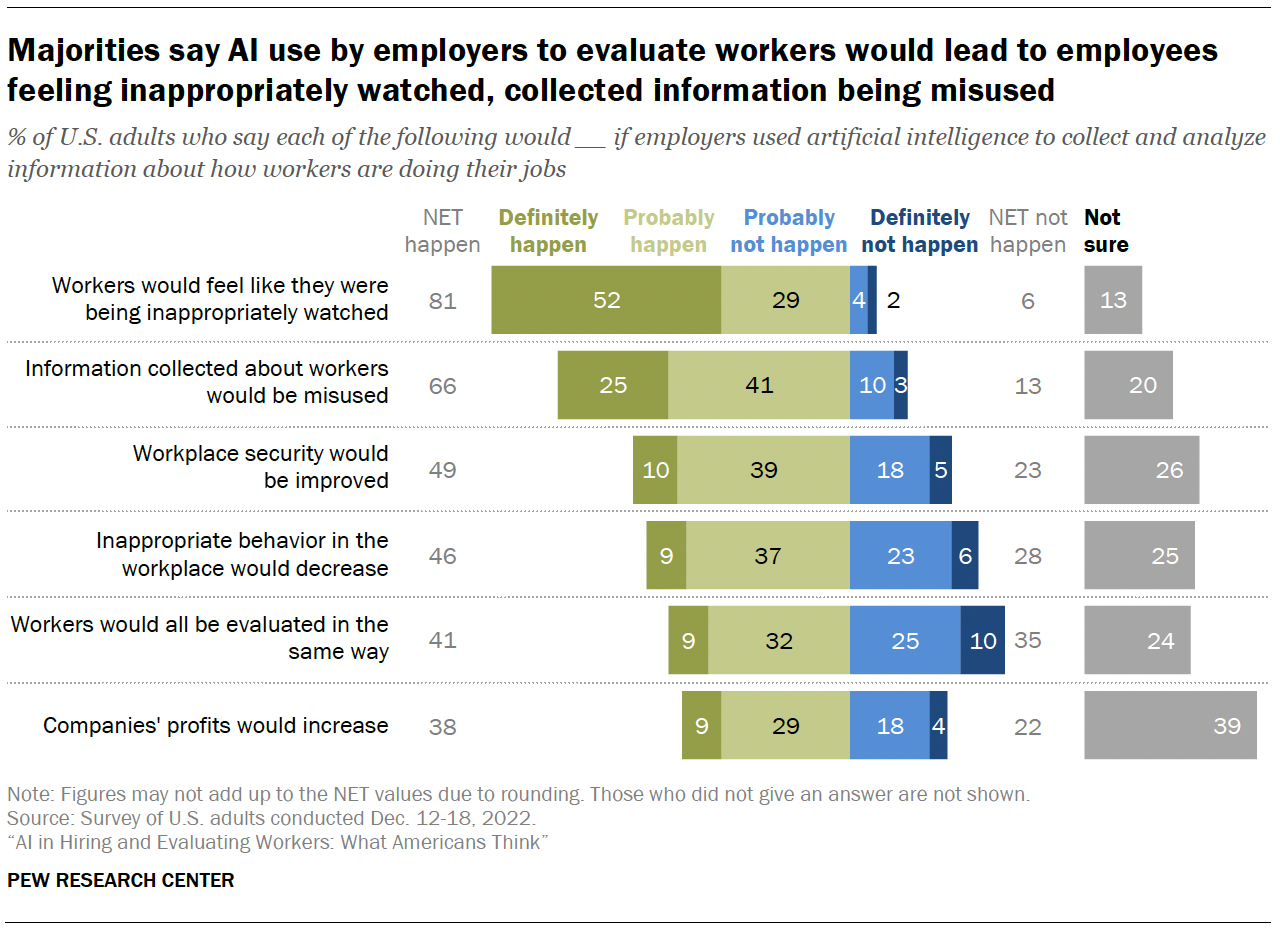

Beyond favoring or opposing AI being used to monitor how workers are doing their jobs, Americans foresee a greater chance of potential downsides than upsides to AI’s use in workplace settings. If AI were used to collect and analyze information about how workers are doing their jobs, about eight-in-ten say workers would definitely (52%) or probably (29%) feel like they were being inappropriately watched. A majority also agrees this would lead to the information collected about workers being misused (66%).

Smaller shares say potentially beneficial outcomes would also likely occur. About half say security in the workplace would probably or definitely improve, and 46% say inappropriate behavior in the workplace would likely decrease. Additionally, 41% say workers would all be evaluated in the same way.

Still, about a third of the public (35%) does not think using AI would lead to equitable evaluations, and 28% think it would be ineffective at curbing inappropriate behavior.

Americans express the greatest uncertainty when it comes to how this AI use would affect companies’ bottom lines. About four-in-ten U.S. adults say they are not sure how companies’ profits would be affected, while a similar share say they think profits would probably or definitely increase.

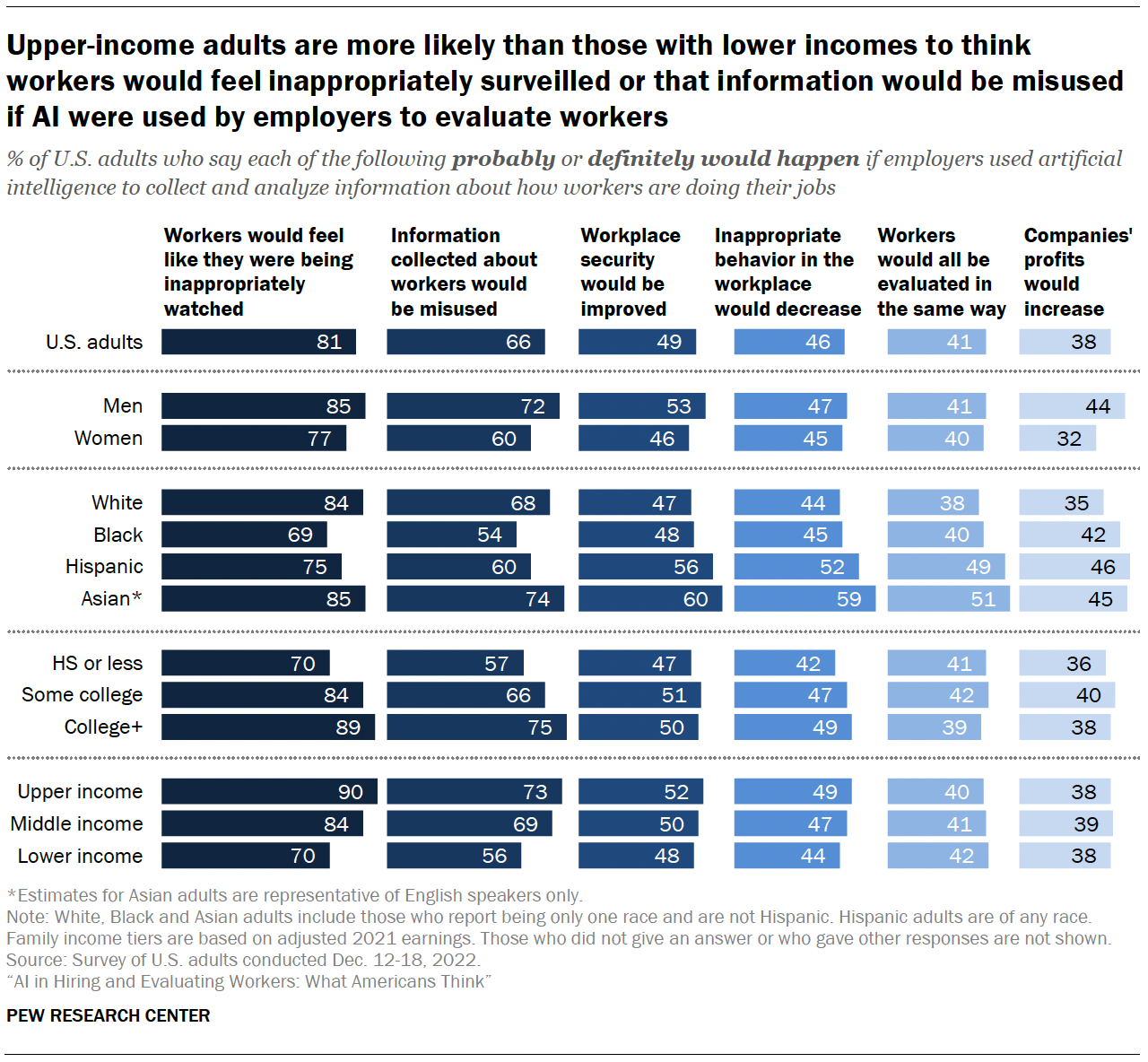

Racial and ethnic differences emerge for each of the six possible outcomes asked about in the survey. White and Asian adults are more likely to see potential downsides for workers if AI were used to monitor them. They foresee workers feeling inappropriately watched or the information collected from this surveillance being misused. Smaller shares (albeit still majorities) of Hispanic adults share this view, and they are more likely than Black adults to think these things would happen.

Conversely, Asian and Hispanic adults are more likely than their White or Black counterparts to think AI being used to monitor workers would lead to improved security, fewer inappropriate behaviors and equal treatment for all workers.

When it comes to AI’s possible effects on the bottom line, Black, Hispanic and Asian adults are more likely to think company profits would go up with AI monitoring than White adults.

Men are more likely than women to see certain impacts if employers use AI systems in the workplace. Larger shares of men than women report feeling that if AI were used in this way, workers would feel inappropriately watched and information collected would be misused. However, men are also more likely to think workplace security would be improved and profits would go up. Similar shares of men and women think use of AI in the workplace would lead to equitable treatment of all workers during evaluations (41% and 40%, respectively) and less inappropriate behavior (47% and 45%).

One other group difference: Nine-in-ten upper-income adults say workers would probably or definitely feel inappropriately surveilled if AI were used to collect and analyze information about how workers are doing their jobs. By comparison, 84% of adults in middle-income households and 70% of those in lower-income households say the same. A similar pattern of opinions is seen regarding misuse of information collected by AI, with higher levels of income being associated with thinking this would likely be the case.

Relatively few Americans think information gathered from AI monitoring in the workplace should be used to decide who gets promoted or fired

AI monitoring tools have made news in the past as companies have used these systems to make termination decisions. Conversely, AI has also been used to evaluate workers’ future potential.

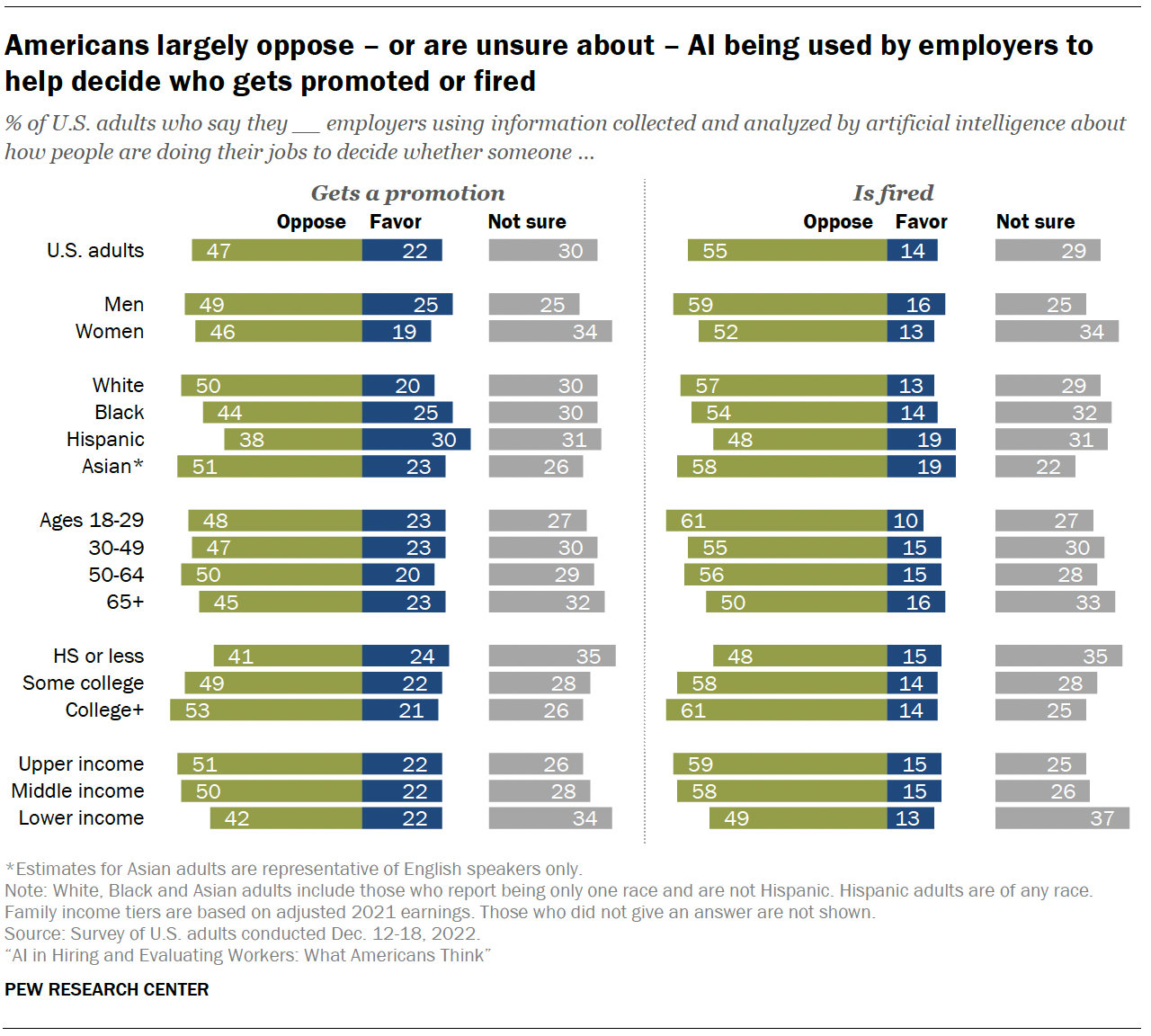

Many Americans are hesitant to let AI be used in decision-making about worker terminations or promotions. Some 55% say they oppose employers using information collected and analyzed by AI about how people are doing their jobs to decide whether someone is fired, and 47% report opposing AI being used in this way to decide if someone gets a promotion.

While Americans are somewhat more open to the use of AI surveillance to aid in promotion decisions than terminations, few say they favor AI involvement in determining promotions (22%) or terminations (14%). There is also uncertainty about using AI to inform promotions and termination decisions. About three-in-ten say they are not sure how they feel about AI being used for each of these options (30% and 29%, respectively).

In all, about half of adults or more across major demographic groups oppose the use of AI in firing decisions, and pluralities across these groups oppose it being used for promotion decisions.

While few differences in favoring AI’s use emerge, there are demographic differences in the level of opposition and uncertainty expressed by these groups. For example, compared with Hispanic adults, larger shares of White and Asian adults oppose the use of AI for both promotion and termination decisions. While White adults are more likely than Black adults to oppose AI being used for promotions, the share of Black adults who oppose it being used in this way exceeds the share of Hispanic adults who say this. Black adults do not differ from any of the other three groups in opposing AI use in firing someone.

Adults with middle and upper household incomes tend to oppose employers using AI systems to make decisions about whom to promote or fire at higher rates than those with lower incomes. Income is also related to expressing uncertainty, with adults in lower-income households more inclined than their more affluent counterparts to say they are unsure of whether they favor or oppose AI’s use in these decisions.

Similarly, women are more likely than men to express uncertainty about both of these possible uses.

Majority of Americans say racial, ethnic bias is a problem in performance evaluations; nearly half who say this think AI can help

Racial biases in the workplace are a well-researched phenomenon. And these biases can lead to discrepancies in how workers are evaluated, compensated and promoted.

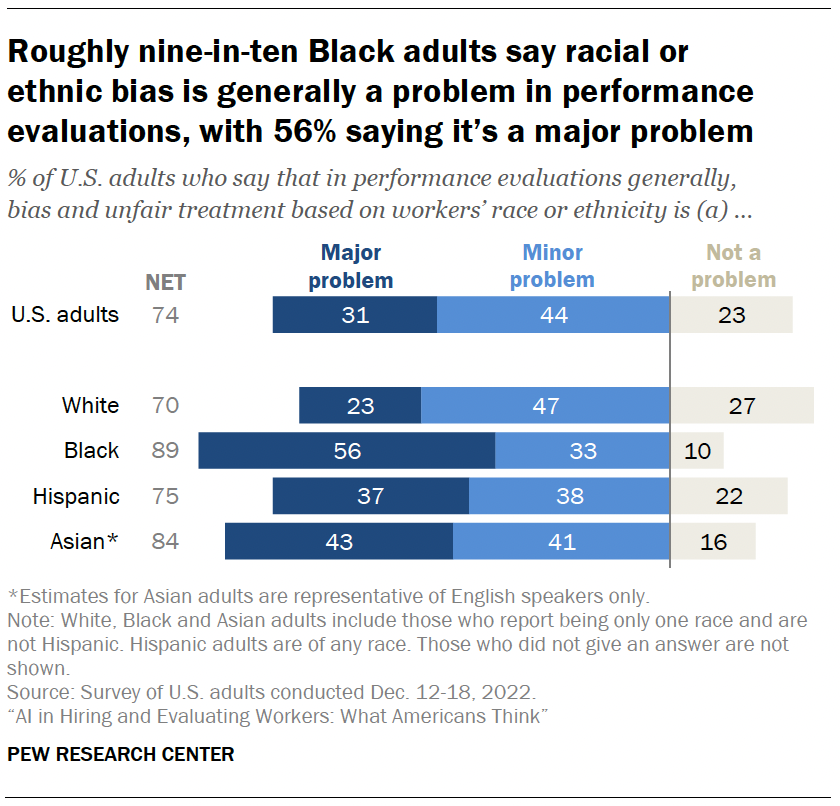

Some 74% of U.S. adults say bias and unfair treatment based on workers’ race or ethnicity is a problem in performance evaluations, with 31% saying this is a major problem. Some 23% say this is not an issue.

Majorities across demographic groups believe that racial or ethnic bias is a problem in worker evaluations. But Black adults stand out for thinking this is a major issue: Some 56% say racial or ethnic bias is a major problem in worker evaluations, while about four-in-ten Asian or Hispanic adults and 23% of White adults say the same.

Experts debate whether AI might curb or exacerbate racial discrimination in the workplace. Those skeptical of AI systems’ capacity to root out bias make the case that AI may not be human, but that does not mean that AI is fully immune to human bias. AI is designed by humans and may replicate existing racially biased practices. At the same time, proponents contend it can be easier to address bias in AI than in humans. As such, some AI advocates have argued that AI could be a potential solution for addressing bias in workplace practices.

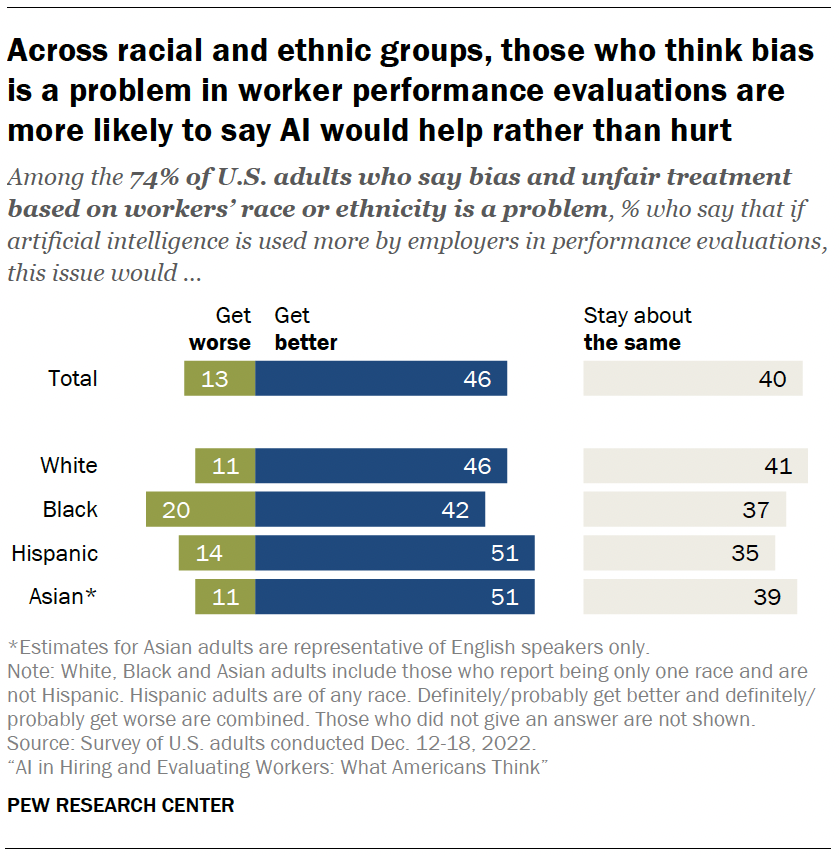

In this survey, those who think racial and ethnic bias is a problem were asked a follow-up question about whether they think the use of AI in worker evaluations would make things better or worse. Some 46% of adults who say bias and unfair treatment based on race or ethnicity is a problem in evaluations feel that AI might be able to help address this issue, while 13% feel AI may make things worse and 40% say AI’s involvement would not affect racial or ethnic bias in performance evaluations. (Overall, this means that about one-third of all U.S. adults say it’s a problem and that it would get better with AI; 10% say it’s a problem and would get worse; and 29% say it’s a problem that would stay about the same.)
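The step from shares of the follow-up group to shares of all U.S. adults is a simple rebasing: each follow-up share is multiplied by the 74% who say bias is a problem. The sketch below illustrates that arithmetic using only the rounded percentages reported here. The function and variable names are illustrative assumptions, not part of the survey's methodology, and because the published overall figures are based on unrounded data, results computed from rounded inputs can differ by about a percentage point.

```python
# Share of all U.S. adults who say racial or ethnic bias is a problem
# in performance evaluations (rounded figure reported in the survey).
SHARE_SEEING_PROBLEM = 0.74

# Among that group only: expected effect of AI on the bias
# (rounded figures reported in the survey).
views_within_group = {
    "better": 0.46,
    "worse": 0.13,
    "no difference": 0.40,
}


def rebase_to_all_adults(group_share, shares_within_group):
    """Convert shares of a subgroup into shares of the full population.

    Hypothetical helper for illustration: multiplies each within-group
    share by the subgroup's share of all adults.
    """
    return {view: group_share * share for view, share in shares_within_group.items()}


overall = rebase_to_all_adults(SHARE_SEEING_PROBLEM, views_within_group)
for view, share in overall.items():
    print(f"{view}: {share:.1%} of all U.S. adults")
```

With these rounded inputs the products come to roughly 34%, 10% and 30% of all adults, in line with the "about one-third," 10% and 29% figures cited above once rounding is taken into account.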

Across racial and ethnic groups, more people predict that AI would benefit the evaluation process than harm it, and those who foresee benefits exceed those who anticipate detriments by notable margins. Still, Black adults who say racial and ethnic bias is a problem in evaluations are more likely than other racial or ethnic groups to think AI would make things worse.

Among those who say bias and unfair treatment based on workers’ race or ethnicity is a problem in performance evaluations, people who favor AI’s use in promotions or terminations are more likely than those who oppose each to say AI could help curb racial and ethnic biases and mistreatment. For example, seven-in-ten who favor AI’s use for promotion decisions think AI would help curb racial bias in performance evaluations, while about half as many who oppose AI’s use for promotions agree (34%). A similar pattern is seen between those who favor versus oppose AI’s use in terminations (72% vs. 38%).