About seven-in-ten Americans use social media to connect with others, share aspects of their lives and consume information. The connections and content they encounter on these sites are shaped not just by their own decisions, but also by the algorithms and artificial intelligence technologies that govern many aspects of these online environments. Social media companies use algorithms for a variety of functions on their platforms, including deciding and structuring the flow of content users see; figuring out what ads a user will like; recommending content users might like; and assisting with content moderation, such as detecting and removing hate speech.

The companies also use these algorithms to scale up efforts to identify false information on their sites. They recognize the pressing challenge of halting the spread of misinformation on their platforms, but they also face vast amounts of content and the constant emergence of new false claims. While a variety of approaches can be used to find content that does not pass fact-checking standards and predict similar posts, the challenges of modern content moderation often require more efficient and scalable approaches than human review alone.

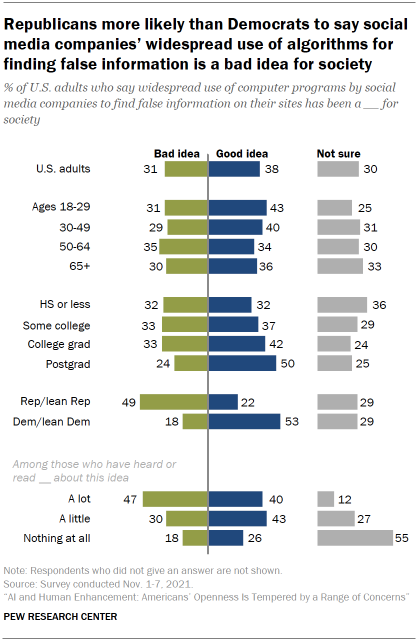

Pew Research Center’s November survey reveals a public relatively split when it comes to whether algorithms for finding false information on these platforms are good or bad for society at large – and similarly mixed views on these algorithms’ performance and impact. It also finds Republicans particularly opposed to such algorithms, echoing partisan divides in other Center research related to technology and online discourse – from the seriousness of offensive content online to whether tech companies should take steps to restrict false information online in the first place.

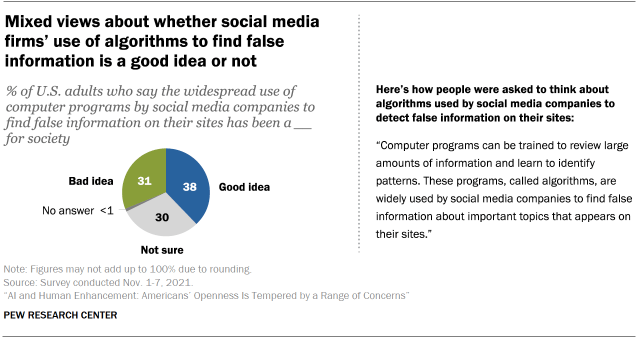

Asked about the widespread use of these computer programs by social media companies to find false information on their sites, 38% of U.S. adults think this has been a good idea for society. But 31% say this has been a bad idea, and a similar share say they are not sure.

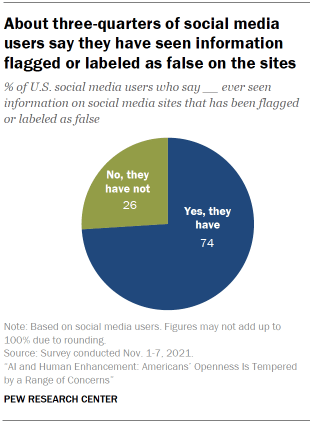

Companies have taken action on posts they determine contain falsehoods, including adding fact-check labels to misinformation relating to the 2020 presidential election and the coronavirus. Many people say they have seen these downstream impacts of algorithms’ work: About three-quarters of social media users (74%) say they have ever seen information on social media sites that has been flagged or labeled as false.

And three-quarters of adults say they have heard or read at least a little about computer programs used by social media companies to detect misinformation, including 24% who have heard a lot. Still, 24% say they have heard nothing at all about these issues.

Republicans especially likely to think social media companies’ use of false information-detecting algorithms negatively impacts online environment

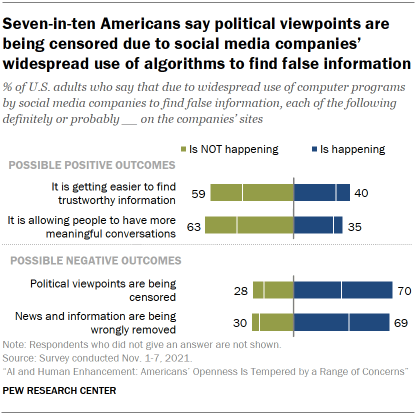

Overall, majorities of Americans believe that the algorithms companies use to find false information are not helping the social media information environment and at times might be worsening it. And even with this across-the-aisle agreement, there are stark partisan differences on the four potential impacts the survey explored – two that are positive in nature and two that are negative.

On the negative end, seven-in-ten adults say political viewpoints are definitely or probably being censored on social media sites due to the widespread use of algorithms to detect false information, and a similar share (69%) says that news and information are definitely or probably being wrongly removed from the sites.

Far smaller shares say the widespread use of such algorithms is leading to the two positive outcomes the survey explored. In fact, about six-in-ten (63%) say that their use is not allowing people to have more meaningful conversations on the platforms, and a similar share says it is not making it easier to find trustworthy information.

Those who are most familiar with these algorithms are more likely than those who are least familiar to think they have negative impacts. For example, three-quarters of those who say they have heard or read a lot about them say news and information are being wrongly removed, while six-in-ten of those who have heard nothing at all say this.

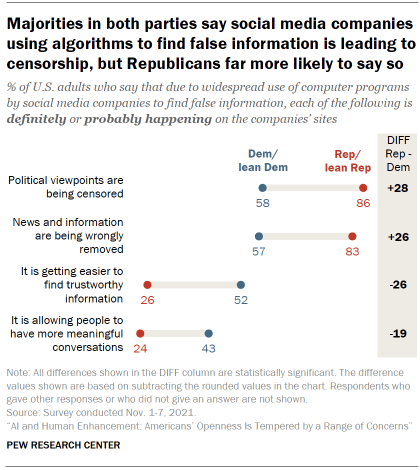

Views also vary dramatically by partisanship. While majorities in both major political parties say political censorship and wrongful removal of information are definitely or probably happening as a result of the widespread use of these algorithms, Republicans and those who lean toward the GOP are far more likely than Democrats and leaners to say so, with differences of 28 percentage points on political censorship and 26 points on wrongful removal. This pattern appears in other Center research. For example, even as most Americans said in 2020 that social media companies likely censor political viewpoints, Republicans were especially likely to say so.

At the same time, Democrats are more likely than Republicans to say the positive impacts the survey explored are realities. Democrats are twice as likely as Republicans to say it is getting easier to find trustworthy information on social media sites due to widespread use of algorithms to find false information, and the share of Democrats who say that this is allowing people to have more meaningful conversations is 19 points higher than among their GOP counterparts.

Political party and awareness also color Americans’ views when asked about the broader impact of these algorithms on society. The share of Republicans who say these programs have been a bad idea for society is about 30 points higher than the parallel share of Democrats.

These findings echo double-digit partisan divides found when asking about the role of social media and the companies that run these sites in society more generally. For example, a 2020 Center study found Republicans more likely than Democrats to say technology companies have too much power in the economy, even as majorities across parties said so. In a separate 2020 survey, those who identified with the GOP were also more likely to think social media have a mostly negative impact on the way things are going in the country.

The balance of views also shifts by how much people have heard about the topic. Views lean negative among those who have heard a lot, with about half saying widespread use of these algorithms is a bad idea. Compared with this group, those who have heard a little are less skeptical – a smaller share of them say this is a bad idea, but at the same time they are more likely to express uncertainty. And among those who have heard nothing at all, over half say they are not sure whether it’s a good or bad idea for society. When it comes to formal educational attainment, those with postgraduate degrees stand out in their views – half say these algorithms are a good idea for society.

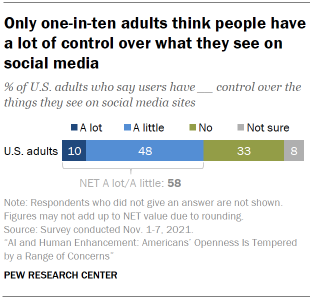

People’s perceptions of the social media experience may factor into their views about societal impact as well. For example, a majority of Americans say people have at least a little control over the things they see on social media sites, but just one-in-ten say people have a lot of control. And a third of adults say users have no control at all. Previous Center work has also found this perceived lack of user control in other social media contexts – from the mix of news people see to what appears in their feeds.

Those who think users have no control over the social media content they see are particularly likely to say algorithms for detecting false information are a bad idea for society. Some 41% of this group say social media companies’ widespread use of such programs is a bad idea. That is about twice the share among those who think users have a lot of control (20%) and 11 points higher than the share among those who say users have a little control (30%).

Majorities say decisions about false information on social media should be made with some human input and algorithms should favor accuracy over speed

Even as social media companies work to improve the accuracy, clarity and efficiency of their algorithms, some experts say the programs are vulnerable to mistakes and bias. Others argue that even social media firms do not fully understand what their algorithms do. Yet the alternative of human reviewers poses challenges as well – from the sheer volume of posts to the potential harm to the reviewers themselves.

This survey probed three key tensions that relate to the quality of the decisions made by these algorithms: How Americans perceive algorithmic decisions compared with decisions made by people, how important it is to include diverse perspectives in the creation of algorithms and whether they should prioritize speed or accuracy.

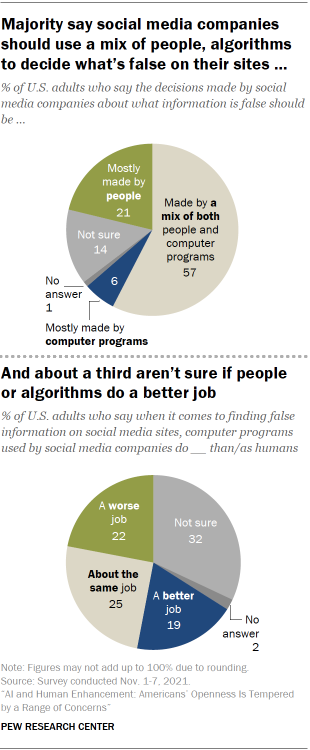

A majority of Americans say decisions about what is false on social media should be made with some human input – that is, by a mix of both people and computer programs. About a fifth say they should be mostly made by people, and just 6% say they should be mostly made by computer programs.

However, people are split when asked whether computers or humans do a better job finding false information. The largest share say they are not sure; another quarter say computers and humans do about the same job, while about one-in-five each say computers do a worse job (22%) or a better job (19%).

Views on relative performance vary by awareness of these algorithms – nearly half (45%) of those who have seen or heard nothing say they are not sure what does a better job, compared with 30% among those who have heard a little and 22% of those who have heard a lot. At the same time, one-third of those who have heard a lot say computer programs do a worse job than humans, versus 21% of those who have heard a little and 14% of those who have heard nothing.

Human judgment can make its way into assessments about what information is false on social media via fact-checker judgments, crowdsourced labeling of false information and review processes in place for contested decisions or to judge context. Even when decisions are made primarily by algorithms, various steps in creating these programs – including using fact-checker judgments to “train” computer programs – can introduce human influence into the process.

The resulting potential for algorithms to also codify bias has been increasingly in the public eye. For example, recently released documents describe concerns with programs designed to detect hate speech failing to protect Black people from harassment, and Black social media users have expressed frustration over content being flagged as inappropriate, mistakenly or intentionally. Other investigations have focused on whether algorithms promote some political viewpoints over others amid widely perceived censorship by social media companies. When it comes to the public’s views, a majority of Americans said in 2018 that computer programs always reflect the biases of their creators, though 40% thought it possible for these programs to make decisions free from human bias.

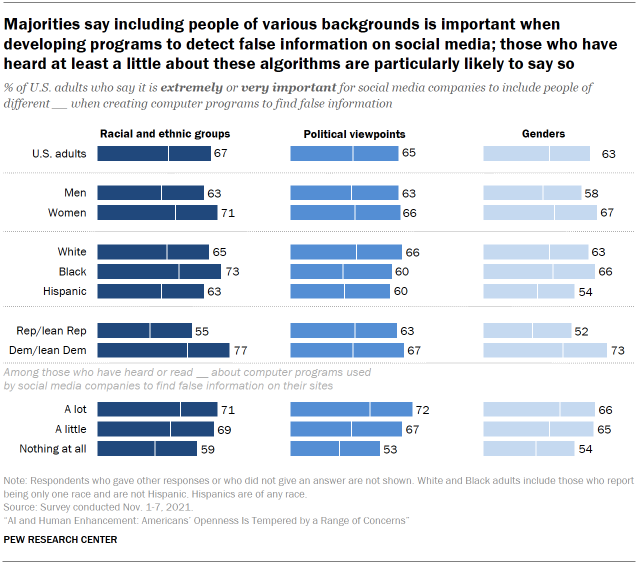

Some have pointed to the lack of meaningful diversity in the technology companies that create and use these programs as one contributing factor. When asked about who companies should include at the algorithmic design stage, notable shares of Americans say including members of a range of groups is important. About six-in-ten or more say it is extremely or very important that social media companies include people of different racial and ethnic groups (67%), political viewpoints (65%) and genders (63%) when creating computer programs to find false information. In each case, about four-in-ten Americans say it is extremely important to include these groups.

Those who have heard at least a little about the use of computer programs by social media companies to find false information on their sites place more importance on diversity in algorithmic design than do those who have heard nothing at all. And partisans differ dramatically in how important they consider it to include people of different racial, ethnic and gender groups, with Democrats being more likely than Republicans to say these things are extremely or very important.

Women are more likely than men to say that including people of different genders is extremely or very important (67% vs. 58%), and Black adults (73%) are more likely than White (65%) or Hispanic adults (63%) to say the same when it comes to people of different racial and ethnic groups. There is little difference by political party in the importance placed on diversity of political viewpoints at the creation stage of these algorithms.

(The survey also sought Americans’ opinions about whether the experiences and views of various groups are taken into account when artificial intelligence programs are created; these varying perspectives are covered in Chapter 1.)

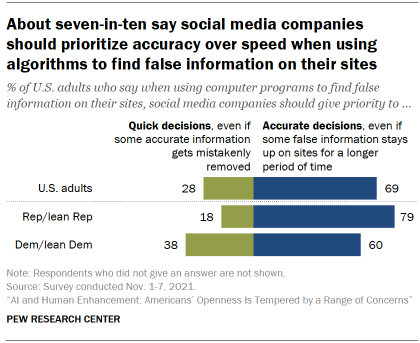

Finally, the survey explored Americans’ views of the tension between the speed with which decisions can be made versus the accuracy of these decisions. Fully 69% of Americans say social media companies should prioritize accurate decisions, even if some false information stays up on sites for a longer period of time – while 28% say they should give priority to quick decisions, even if some accurate information gets mistakenly removed.

Compared with accuracy, relatively small shares in both parties say speed should be prioritized; however, the share of Democrats who say so is 20 points higher than the share of Republicans who say the same (38% vs. 18%). Women are also more likely than men to say speed should be the priority (32% vs. 24%), as are Black (41%) or Hispanic (32%) adults compared with White adults (24%).

53% worry government will not go far enough in regulating social media companies’ algorithms for finding false information

Whistleblower testimony has reignited debate about regulating the algorithms social media companies use. At the same time, federal agencies are pushing social media companies to disclose more about the data they collect and how their algorithms work. While some have called for more regulation of algorithms generally, there is still debate about how this should be accomplished – in part because of internet and free speech issues that could eventually end up in the courts.

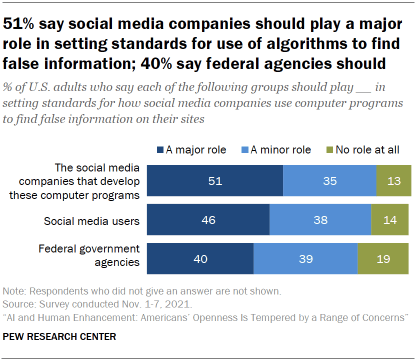

This survey asked Americans about the role they think three key groups should play in setting standards for algorithms used by social media companies. Some 51% say the social media companies that develop these computer programs should play a major role in setting standards for the use of algorithms for finding false information. Nearly half (46%) say social media users should play a major role in setting these standards. And four-in-ten say the same about federal government agencies.

Democrats are far more likely than Republicans to say federal government agencies should play a major role in setting these standards (51% vs. 27%). They are also more likely to say that the social media companies themselves should play a major role (58% vs. 42%).

Other Center research shows that over the past several years Americans have grown slightly more open to the general idea of the U.S. government taking steps to restrict false information online. About half said in 2021 that the government should do this, even if it limits freedom of information, with Democrats far more likely to say this than Republicans.

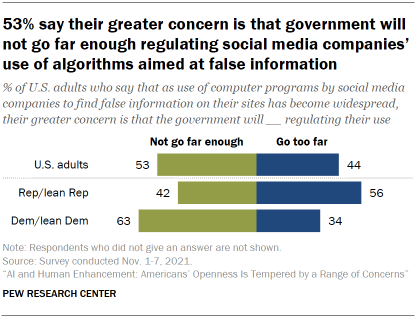

When asked what their greater concern is in terms of regulating social media companies’ use of these algorithms, 53% of Americans say it is that the government will not go far enough – while 44% are more worried the government will go too far. These views again vary by party, with Democrats more likely than Republicans to be concerned that the government will not go far enough.

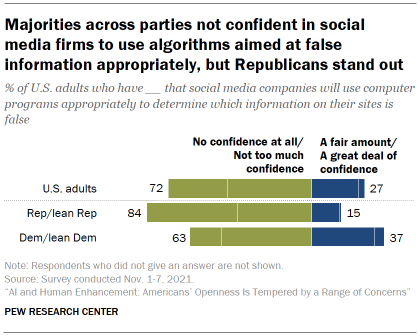

Americans also do not have much confidence in social media companies’ appropriate use of these algorithms. Fully 72% of Americans have little or no confidence that social media companies will use computer programs appropriately to determine which information on their sites is false, including three-in-ten who have no confidence at all. On the other hand, just 3% of Americans have a great deal of confidence that social media companies will do this.

Partisans diverge dramatically in these respects. Majorities across both parties are not confident, but Republicans are much more likely than Democrats to have little or no confidence – a difference of 21 percentage points.

This pattern also appears in previous Center work on Americans’ confidence in social media companies to determine which posts should be labeled as inaccurate or misleading in the first place – algorithmically or not. Republicans stood out in their lack of confidence, according to the June 2020 survey.

Majorities of Americans oppose use of algorithms for final say over mortgages, jobs, parole, medical treatments

The basic principles behind the algorithms that social media companies use to detect certain types of content on their sites are also used in other contexts throughout society – sometimes with far-reaching implications that can affect people’s lives and livelihoods.

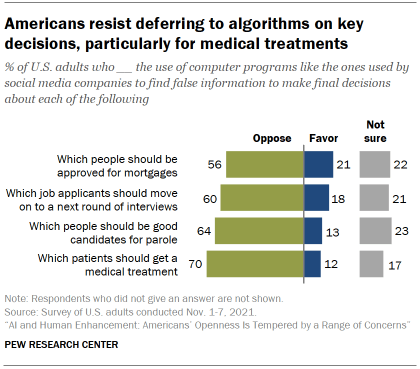

Majorities of Americans oppose the use of algorithms to make final decisions about which patients should get a medical treatment (70%), which people would be good candidates for parole (64%), which job applicants should move on to a next round of interviews (60%) or which people should be approved for mortgages (56%). About one-in-five or fewer favor each of these ideas. And roughly a quarter or fewer say they are not sure.

Proponents of algorithms sometimes make the case that automated systems can reduce discrimination. But the issue has been debated widely, especially when it comes to race and ethnicity, with others saying algorithms themselves can be inherently discriminatory in settings from the criminal justice system to the job market.

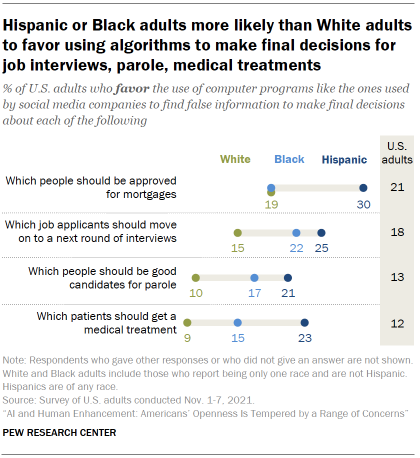

While small shares of adults across demographic groups favor computer programs making the final decisions in each case, there are some modest differences by race and ethnicity.

Hispanic or Black adults are more likely than their White counterparts to favor algorithmic final decisions in three of these contexts – medical treatments, job interviews and parole. Hispanic adults are also more likely than either Black or White adults to say this about mortgages.