In public opinion surveys, the way questions are asked can influence the answers people give. To better understand this phenomenon, pollsters sometimes measure the degree to which different questions elicit different responses. One way they do this is through survey experiments.

In general, survey experiments involve changing some aspect of the survey experience for some respondents. While one person might see a question that uses one word or phrase, for example, another might see a question that uses a slightly different word or phrase.

The results of survey experiments provide two kinds of information for researchers. First, they help measure opinion with more nuance: By changing aspects of a survey question, researchers can measure relative differences in opinion. Second, they can help researchers design better questions by showing whether answers change if different words are used. If researchers see no major differences, they can be more confident that both kinds of questions capture the same underlying opinion.

A key to conducting survey experiments is that participants are randomly assigned to different variations of a survey; the version they see is not based on their previous answers or any other personal characteristics. This way, we can generally be confident that any differences in answers across groups of respondents are not based on each group’s particular attributes.
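
As a rough illustration of random assignment, the sketch below shuffles a list of respondent IDs and deals them into two question versions. The function name, the version labels, the seed and the 1,000 respondent IDs are all hypothetical, chosen only for this example. It is not a description of how any particular survey platform assigns respondents.

```python
import random

def assign_versions(respondent_ids, versions=("jobs", "good jobs"), seed=2019):
    """Randomly split respondents into near-equal groups, one per question version.

    All names and defaults here are hypothetical, for illustration only.
    """
    rng = random.Random(seed)      # fixed seed only so the sketch is reproducible
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)          # order is now unrelated to any respondent attribute
    # Deal the shuffled respondents round-robin into the versions.
    return {rid: versions[i % len(versions)] for i, rid in enumerate(shuffled)}

# Hypothetical panel of 1,000 respondent IDs.
assignment = assign_versions(range(1000))
counts = {v: list(assignment.values()).count(v) for v in ("jobs", "good jobs")}
print(counts)  # {'jobs': 500, 'good jobs': 500}
```

Because the version a respondent sees depends only on the shuffle, not on earlier answers or demographics, the two groups should look similar on average apart from chance variation.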

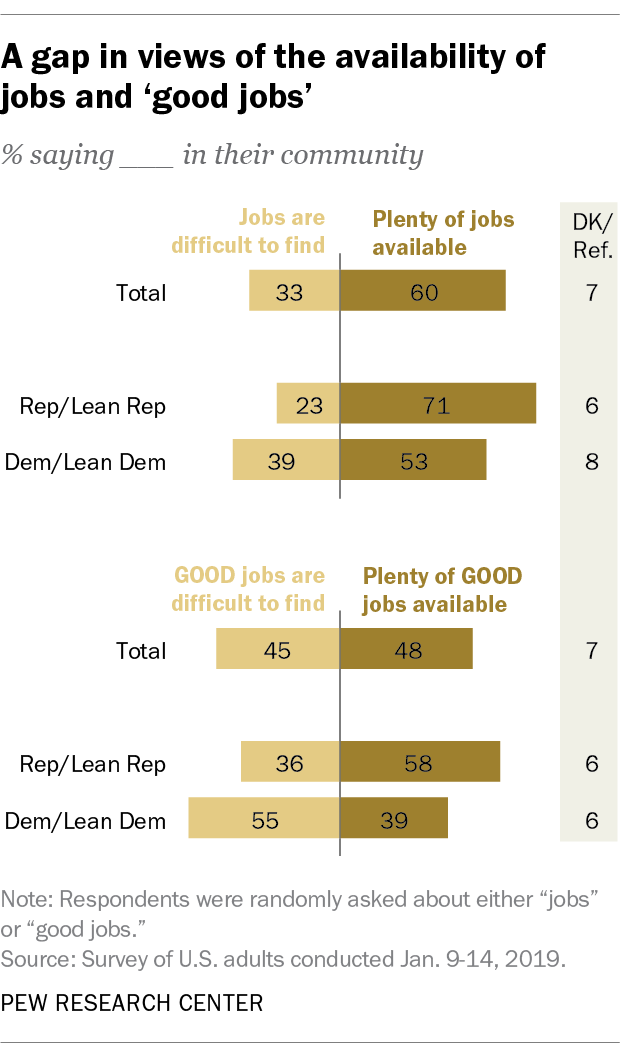

In a January 2019 survey, Pew Research Center asked respondents about the availability of jobs in their area. In that survey, researchers randomly assigned half of respondents to receive a version of the question that asked about “good” jobs while the other half were asked a version that didn’t include this positive qualifier.

The experiment found that respondents were more likely to say plenty of “jobs” were available in their community than they were to say plenty of “good jobs” were available (60% vs. 48%). While there were partisan differences in these views (Republicans had more positive views of job availability), both Republicans and Democrats were significantly more likely to say “jobs” were available than to say “good jobs” were.
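
One common way to check whether a gap like 60% vs. 48% is larger than chance alone would produce is a two-proportion z-test. The sketch below is a simplified illustration, not the Center's actual procedure. The group sizes of 750 are hypothetical, since the sample sizes are not stated here, and the test ignores survey weights and design effects, which published analyses generally account for.

```python
import math

def two_proportion_z(p1, n1, p2, n2):
    """Two-sided z-test for a difference between two independent proportions.

    Simplified sketch: assumes simple random samples and no survey weights.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)              # share under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))            # two-sided normal tail area
    return z, p_value

# Percentages are from the text; the group sizes are HYPOTHETICAL.
z, p_value = two_proportion_z(0.60, 750, 0.48, 750)
print(f"z = {z:.2f}, p = {p_value:.2g}")
```

Under these assumed group sizes, the 12-point gap would be well outside what random assignment alone would be expected to produce. Smaller groups would make the same gap less conclusive.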

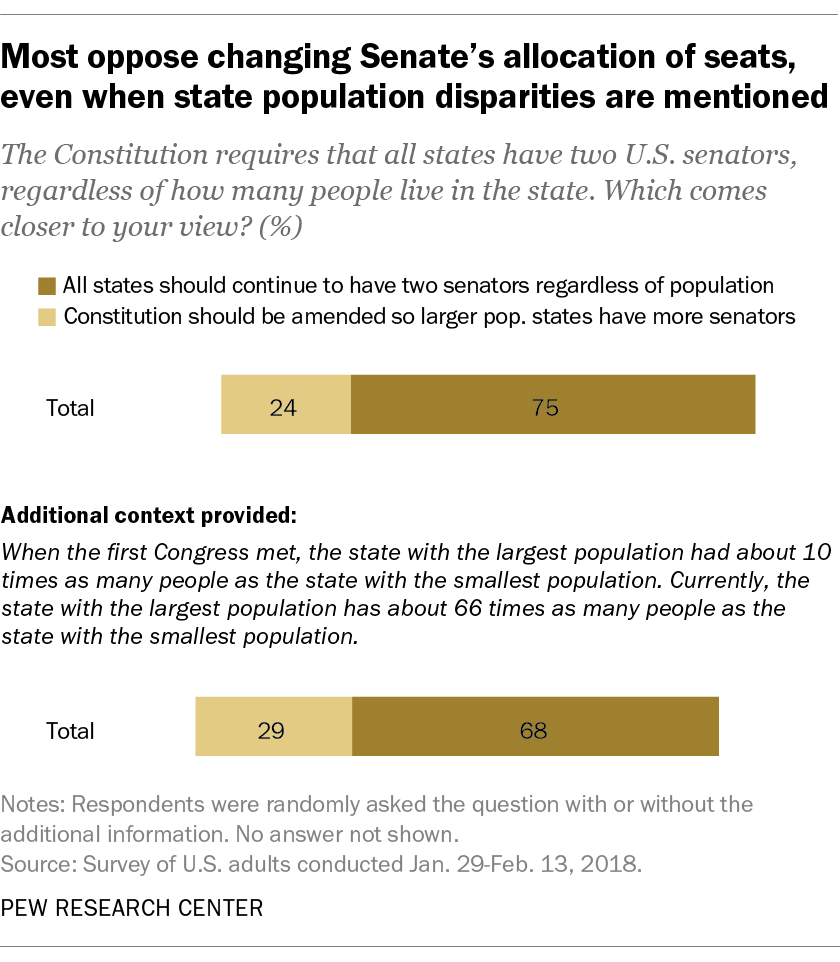

Survey experiments also allow researchers to measure how much context matters when asking a question. In an early 2018 survey, researchers asked one group of respondents whether the U.S. Senate should have two seats for every state, regardless of how many people live in the state. A second group of respondents received the same question but were given some additional context – specifically, that the population difference between the nation’s largest and smallest states had grown dramatically over time.

Adding that context mattered, but only modestly. Those given context were slightly more likely to support amending the Constitution so more populous states have more senators. But overall, across both versions of the question, a majority of Americans said all states should continue to have two senators regardless of population.

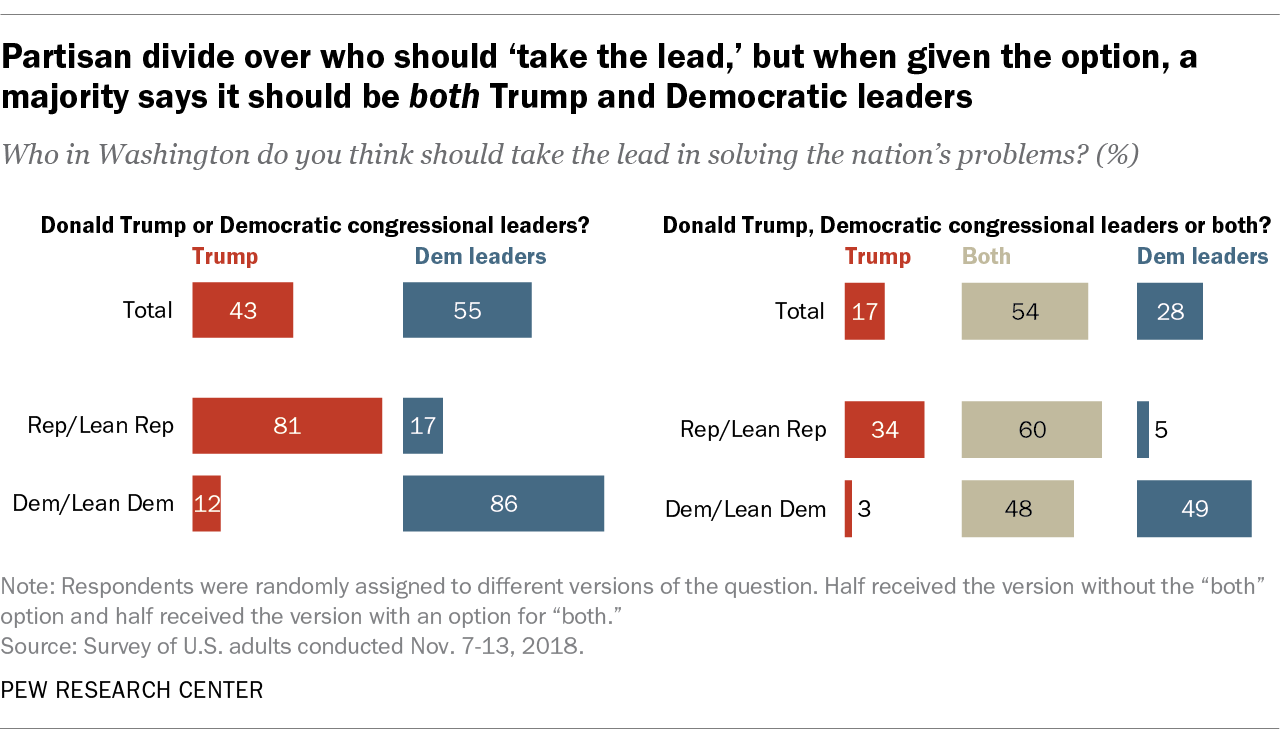

Another kind of survey experiment involves randomly assigning different answer choices to survey questions. A recent survey by the Center asked Americans whether Trump or Democratic congressional leaders should take the lead in solving the nation’s problems. One group of respondents saw only two possible answers: Trump or Democratic leaders. But another group saw an additional answer: both.

The experiment showed one consistent pattern: Regardless of the answer options, a greater share of the public said that Democrats should take the lead than said Trump should take the lead. But in the version of the question in which “both” was an explicit option, a 54% majority of Americans chose that response.

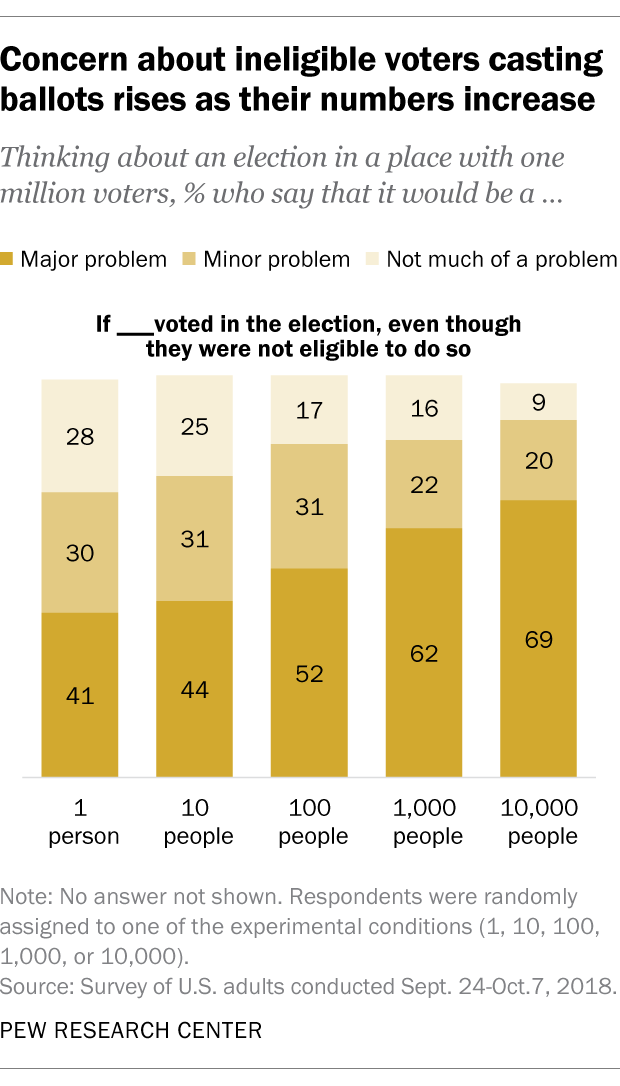

Survey experiments may also involve randomizing specific details within a question to better understand nuances in views. For example, a report on Americans’ views about elections included a question that described a hypothetical election with 1 million voters in which one person voted even though they were not eligible to do so. Around four-in-ten Americans (41%) said this would be a “major problem.”

But the question also included a set of variations that were randomly assigned. In those versions, the number of people who were described as voting despite being ineligible to vote changed. While some people received the scenario in which one person voted despite being ineligible, others were asked about 10, 100, 1,000, or 10,000 people voting despite being ineligible.

The experiment found that 69% of U.S. adults said that 10,000 people voting despite being ineligible was a major problem, compared with 41% who said the same about one ineligible person voting. Since respondents were randomly assigned into each version of the question, these differences can be attributed to the changing information itself, not the attributes of the people who responded.

These aren’t the only kinds of experiments survey researchers rely on. Another type, called question order experiments, involves randomizing the order of two or more questions to understand how the order might affect responses. If there are significant differences between respondents who received the questions in different orders, we can attribute these differences to the order: Something about the first question in the sequence (e.g., the topic or considerations mentioned) influenced how respondents interpreted the subsequent question(s).

Sometimes these differences can be substantively meaningful. But if a significant order effect is discovered, researchers will typically report only the results from respondents who received a given item first among the randomized items. This is because researchers often want to capture opinions that are less likely to be influenced by the content of earlier questions.
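
A question order experiment can be sketched in a few lines: shuffle the items independently for each respondent, then select the respondents who saw a given item first. The item labels, the seed and the 200 respondent IDs below are hypothetical placeholders, and the helper names are invented for this example.

```python
import random

def randomize_order(respondent_ids, items=("question_a", "question_b"), seed=7):
    """Give each respondent an independently shuffled order of the items."""
    rng = random.Random(seed)      # fixed seed only so the sketch is reproducible
    orders = {}
    for rid in respondent_ids:
        order = list(items)
        rng.shuffle(order)
        orders[rid] = order
    return orders

def saw_first(orders, item):
    """Return the respondents who received `item` before any other randomized item."""
    return [rid for rid, order in orders.items() if order[0] == item]

# Hypothetical panel of 200 respondent IDs.
orders = randomize_order(range(200))
first_a = saw_first(orders, "question_a")
first_b = saw_first(orders, "question_b")
print(len(first_a), len(first_b))  # roughly half each; exact split varies with the seed
```

If an order effect turns up, results for `question_a` would be reported from `first_a` only, since those respondents answered it before seeing the other item.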

Survey experiments give researchers a great deal of leverage when trying to understand why people express the opinions that they do. Since measuring public opinion depends on the questions researchers ask, it’s worth testing how people respond to them.

To learn more about survey question wording in general, watch this video.