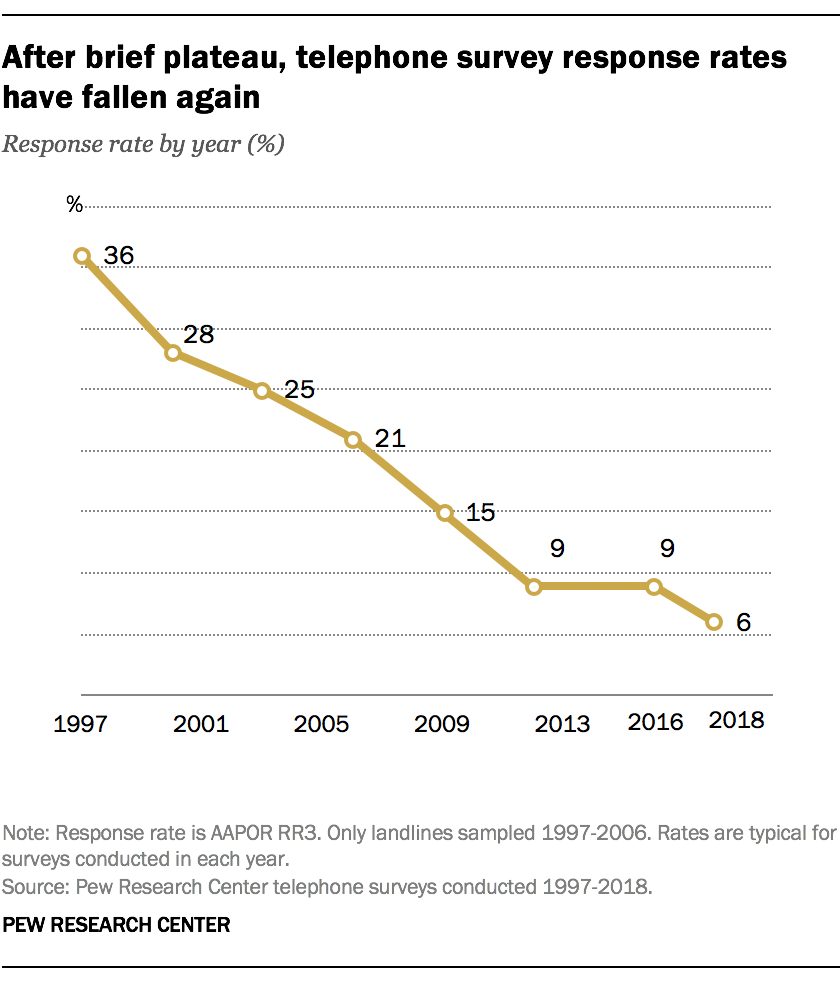

After stabilizing briefly, response rates to telephone public opinion polls conducted by Pew Research Center have resumed their decline.

In 2017 and 2018, typical telephone survey response rates fell to 7% and 6%, respectively, according to the Center’s latest data. Response rates had previously held steady around 9% for several years.

While the Center’s telephone survey protocol is somewhat different from those used by other organizations, conversations with contractors and other pollsters confirm that the pattern reported here is being experienced more generally in the industry.

Among the factors depressing participation in telephone polling may be the recent surge in automated telemarketing calls, particularly to cellphones. The volume of robocalls has skyrocketed in recent years, reaching an estimated 3.4 billion per month. Since public opinion polls typically appear as an unknown or unfamiliar number, they are easily mistaken for telemarketing appeals.

In addition, new technologies sometimes erroneously flag survey calls – even those conducted for the Centers for Disease Control and Prevention – as “spam.” Numerous cellphone operating systems, cellular carriers and third-party apps block incoming phone numbers or warn users that incoming numbers are from potential scammers, fraudsters or spammers.

For pollsters, these new challenges add to a long-standing set of reasons why some people may not respond to surveys: concerns about intrusions on their time and privacy, feeling too busy to participate and a general lack of interest in taking surveys.

But low response rates don’t necessarily mean that telephone polling is completely broken. Studies examining the impact of low response on data quality have generally found that response rates are an unreliable metric of accuracy. Pew Research Center studies conducted in 1997, 2003, 2012 and 2016 found little relationship between response rates and accuracy, and other researchers have found similar results. In the 2018 midterm election, polls – including those conducted by phone with live interviewers – performed well by historical standards. Nonpartisan polls in 2018 were more accurate, on average, than midterm polls since 1998.

While low response rates don’t render polls inaccurate on their own, they shouldn’t be completely ignored, either. A low response rate does signal that the risk of error is higher than it would be with higher participation. The key issue is whether the attitudes and other outcomes measured in the poll are related to people’s decisions about taking the survey. In some cases, there is a relationship, but it is corrected by standard weighting adjustment. In other cases, such as when polls attempt to measure volunteerism, standard weighting falls short, resulting in biased estimates.
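To make the distinction concrete, here is a minimal sketch in Python of the two situations described above. It is an illustration under stated assumptions, not the Center's weighting procedure: the two groups, their population shares and every rate below are hypothetical, and the "weighting" is a simple adjustment that scales each group's respondents back to its known population share.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """One weighting cell, such as a demographic group. All values are hypothetical."""
    pop_share: float       # known population share (for example, from a census benchmark)
    approve_rate: float    # true share holding some attitude; unrelated to responding within the cell
    volunteer_rate: float  # true share who volunteer
    resp_volunteer: float  # probability that a volunteer takes the poll
    resp_other: float      # probability that a non-volunteer takes the poll


def cell_response_rate(c: Cell) -> float:
    """Expected response rate within one cell."""
    return c.volunteer_rate * c.resp_volunteer + (1 - c.volunteer_rate) * c.resp_other


def volunteer_share_among_respondents(c: Cell) -> float:
    """Expected share of volunteers among the people in this cell who respond."""
    return c.volunteer_rate * c.resp_volunteer / cell_response_rate(c)


def estimates(cells, respondent_value, true_value):
    """Return (truth, unweighted estimate, weighted estimate) in expectation."""
    truth = sum(c.pop_share * true_value(c) for c in cells)
    # Each cell's share of the respondent pool before any weighting.
    resp_mass = [c.pop_share * cell_response_rate(c) for c in cells]
    unweighted = sum(m * respondent_value(c) for m, c in zip(resp_mass, cells)) / sum(resp_mass)
    # Weighting: restore each cell to its known population share.
    weighted = sum(c.pop_share * respondent_value(c) for c in cells)
    return truth, unweighted, weighted


# Hypothetical population: group A responds more often than group B, and within
# each group volunteers are twice as likely to respond as non-volunteers.
cells = [
    Cell(pop_share=0.4, approve_rate=0.6, volunteer_rate=0.3, resp_volunteer=0.12, resp_other=0.06),
    Cell(pop_share=0.6, approve_rate=0.4, volunteer_rate=0.2, resp_volunteer=0.06, resp_other=0.03),
]

# Attitude tied to response only through group membership: weighting recovers the truth.
approve = estimates(cells, lambda c: c.approve_rate, lambda c: c.approve_rate)

# Volunteerism tied to response within each group: weighting still overstates it.
volunteer = estimates(cells, volunteer_share_among_respondents, lambda c: c.volunteer_rate)

for name, (truth, unweighted, weighted) in [("approve", approve), ("volunteer", volunteer)]:
    print(f"{name}: truth={truth:.3f} unweighted={unweighted:.3f} weighted={weighted:.3f}")
```

In this toy setup the weighted attitude estimate matches the true value, because differences in participation run entirely through group membership, which the weights correct. The weighted volunteerism estimate remains too high, because within each group the people who respond differ from those who do not, and group-level weights cannot see that.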

Another important concern is that low response rates lead to higher survey costs – sometimes much higher. This reality often forces survey organizations to make trade-offs in their studies, such as reducing sample size, extending field periods or reducing quality in other ways in order to shift resources to the interviewing effort. For a number of reasons – including the twin issues of declining response and rising costs – Pew Research Center now conducts most of its U.S. polling online using its American Trends Panel.
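A rough back-of-the-envelope sketch shows why falling response rates raise costs. It assumes, as a simplification, that the response rate is simply completed interviews divided by sampled phone numbers (formal response rate definitions are more involved) and that a poll aims for a hypothetical 1,000 completed interviews. The 9%, 7% and 6% rates are the ones cited in this post; the function name and the target are illustrative.

```python
import math


def numbers_needed(target_completes: int, response_rate: float) -> int:
    """Sampled phone numbers needed to expect `target_completes` interviews,
    under the simplifying assumption that response rate = completes / numbers sampled."""
    return math.ceil(target_completes / response_rate)


TARGET = 1000  # hypothetical target number of completed interviews

for rate in (0.09, 0.07, 0.06):
    print(f"{rate:.0%} response rate: about {numbers_needed(TARGET, rate):,} numbers")
```

Under these assumptions, moving from a 9% to a 6% response rate means working through roughly one and a half times as many phone numbers for the same number of interviews, before accounting for repeated call attempts.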

Related:

Main report: Growing and Improving Pew Research Center’s American Trends Panel

What our transition to online polling means for decades of phone survey trends

Q&A: Why and how we expanded our American Trends Panel to play a bigger role in our U.S. surveys