This project was designed and conducted by the Pew Research Center for the People & the Press. The staff includes Andrew Kohut, Scott Keeter, Michael Dimock, Carroll Doherty, Michael Remez, Leah Christian, Jocelyn Kiley, Rob Suls, Shawn Neidorf, Alec Tyson, Danielle Gewurz and Mary Pat Clark. Andrew Kohut, president of the Pew Research Center and director of the Pew Research Center for the People & the Press, originated this research project in 1997 and oversaw the replication studies in 2003 and 2012. In addition, Paul Taylor, executive vice president of the Pew Research Center, and Greg Smith, senior researcher at the Pew Forum on Religion & Public Life, provided assistance.

The research design for the study was informed by the advice of an expert panel that included Jonathan Best, Mike Brick, Diane Colasanto, Larry Hugick, Courtney Kennedy, Jon Krosnick, Linda Piekarski, Mark Schulman, Evans Witt, and Cliff Zukin. Larry Hugick, Evans Witt, Jonathan Best, Julie Gasior and Stacy DiAngelo of Princeton Survey Research Associates, and the interviewers and staff at Princeton Data Source were responsible for data collection and management. The contribution of Survey Sampling International, which donated the telephone sample and demographic data for the project, is also gratefully acknowledged.

Survey Methodology

The analysis in this report is based on two telephone surveys conducted by landline and cell phone among national samples of adults living in all 50 states and the District of Columbia. One survey, conducted January 4-8, 2012 among 1,507 adults, used Pew Research’s standard survey methodology (902 respondents were interviewed on a landline telephone and 605 were interviewed on a cell phone, including 297 who had no landline telephone). The other survey, conducted January 5-March 15, 2012 among 2,226 adults, used additional methods to increase participation (1,263 respondents were interviewed on a landline telephone and 963 were interviewed on a cell phone, including 464 who had no landline telephone). For more on the additional methods used in the high-effort survey, see the Interviewing section. The surveys were conducted by interviewers at Princeton Data Source under the direction of Princeton Survey Research Associates International. Interviews for both surveys were conducted in English and Spanish.

Sample Design

Both the standard survey and the high-effort survey used the following sample design. A combination of landline and cell phone random digit dial samples was used; samples for both surveys were provided by Survey Sampling International. Landline and cell phone numbers were sampled to yield a ratio of approximately two completed landline interviews to each cell phone interview.

The design of the landline sample ensures representation of both listed and unlisted numbers (including those not yet listed) by using random digit dialing. This method uses random generation of the last two digits of telephone numbers selected on the basis of the area code, telephone exchange, and bank number. A bank is defined as 100 contiguous telephone numbers, for example 800-555-1200 to 800-555-1299. The telephone exchanges are selected to be proportionally stratified by county and by telephone exchange within the county. That is, the number of telephone numbers randomly sampled from within a given county is proportional to that county’s share of telephone numbers in the U.S. Only banks of telephone numbers containing three or more listed residential numbers are selected.
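The bank-based number generation described above can be illustrated with a minimal sketch. It assumes banks are given as 8-digit strings (area code, exchange, and two-digit bank number) and that a count of listed residential numbers is available for each bank. The function name, its parameters, and the uniform choice among eligible banks are illustrative assumptions; the proportional stratification by county described in the text is not modeled here.

```python
import random


def generate_rdd_numbers(banks, listed_counts, n, min_listed=3, seed=None):
    """Draw n telephone numbers by appending two random digits to a bank.

    banks: list of 8-digit strings (area code + exchange + bank number),
           e.g. "80055512" covers 800-555-1200 through 800-555-1299.
    listed_counts: dict mapping bank -> number of listed residential numbers.
    Only banks with at least `min_listed` listed numbers are eligible.
    NOTE: banks are chosen uniformly here (an assumption for illustration);
    stratification by county is not modeled.
    """
    rng = random.Random(seed)
    eligible = [b for b in banks if listed_counts.get(b, 0) >= min_listed]
    if not eligible:
        raise ValueError("no bank meets the listed-number threshold")

    numbers = []
    for _ in range(n):
        bank = rng.choice(eligible)
        last_two = rng.randrange(100)  # 00 through 99, listed or unlisted
        numbers.append(f"{bank}{last_two:02d}")
    return numbers


# Hypothetical banks: the second has too few listed numbers to be eligible.
sample = generate_rdd_numbers(
    ["80055512", "80055513"], {"80055512": 5, "80055513": 1}, n=5, seed=1
)
```

Because the last two digits are generated at random rather than taken from a directory, unlisted numbers in an eligible bank have the same chance of selection as listed ones.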

The cell phone sample is drawn through systematic sampling from dedicated wireless banks of 100 contiguous numbers and shared service banks with no directory-listed landline numbers (to ensure that the cell phone sample does not include banks that are also included in the landline sample). The sample is designed to be representative both geographically and by large and small wireless carriers.
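Systematic sampling, as mentioned above, takes every k-th element of an ordered frame after a random start. The sketch below is a generic illustration of that technique, not the vendor's actual procedure; the function name and the representation of the frame as a Python list are assumptions.

```python
import random


def systematic_sample(frame, n, seed=None):
    """Select n items from an ordered frame at a fixed interval.

    The interval is len(frame) / n, and the starting point is drawn at
    random within the first interval so every item can be selected.
    """
    if not 0 < n <= len(frame):
        raise ValueError("n must be between 1 and len(frame)")
    rng = random.Random(seed)
    step = len(frame) / n
    start = rng.random() * step
    last = len(frame) - 1
    # min() guards against a floating-point edge case at the end of the frame.
    return [frame[min(int(start + i * step), last)] for i in range(n)]


# Hypothetical frame of 1,000 bank identifiers, sorted (for example) by region.
picked = systematic_sample(list(range(1000)), n=10, seed=3)
```

When the frame is sorted by geography or carrier before sampling, the fixed interval spreads the selections across those groups, which is one way a sample can be made representative along such dimensions.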

Both the landline and cell samples are released for interviewing in replicates, which are small random samples of each larger sample. Using replicates to control the release of telephone numbers ensures that the complete call procedures are followed for all numbers dialed. The use of replicates also improves the overall representativeness of the survey by helping to ensure that the regional distribution of numbers called is appropriate.
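A minimal sketch of how a sample might be divided into replicates follows. The function name, the replicate size, and the simple shuffle-and-slice approach are assumptions for illustration; the text does not specify how the replicates were actually formed.

```python
import random


def make_replicates(numbers, replicate_size, seed=None):
    """Shuffle the full sample and cut it into replicates.

    Each replicate is itself a small random subsample of the whole, so
    releasing replicates one at a time keeps the set of numbers in the
    field representative while call procedures are completed.
    """
    rng = random.Random(seed)
    shuffled = list(numbers)
    rng.shuffle(shuffled)
    return [
        shuffled[i:i + replicate_size]
        for i in range(0, len(shuffled), replicate_size)
    ]


# Hypothetical sample of 25 numbers released in replicates of 10.
replicates = make_replicates(range(25), replicate_size=10, seed=0)
```

A new replicate would be released only when the numbers already in the field have received their full schedule of call attempts.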

Respondent Selection

Respondents in the landline sample were selected by asking for either the youngest male or the youngest female, 18 years of age or older, who is now at home (in a random half of the households interviewers ask to speak with the youngest male first, and in the other half the youngest female). If there is no eligible person of the requested gender at home, interviewers ask to speak with the youngest adult of the opposite gender who is now at home. This method of selecting respondents within each household improves participation among young people, who are often more difficult to interview than older people because of their lifestyles, but it is not a random sampling of members of the household.
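The within-household selection rule for the landline sample can be expressed as a short sketch. The function name and the representation of household members as `(age, gender)` tuples are assumptions made for illustration.

```python
def select_landline_respondent(adults_at_home, ask_male_first):
    """Apply the youngest-male / youngest-female rule.

    adults_at_home: list of (age, gender) tuples for people now at home,
                    with gender given as "M" or "F".
    ask_male_first: True for the half of households where interviewers
                    ask for the youngest male first.
    Returns the selected (age, gender) tuple, or None if no adult is home.
    """
    eligible = [person for person in adults_at_home if person[0] >= 18]
    order = ("M", "F") if ask_male_first else ("F", "M")
    for gender in order:
        candidates = [person for person in eligible if person[1] == gender]
        if candidates:
            # Youngest eligible adult of the requested gender.
            return min(candidates, key=lambda person: person[0])
    return None


# Hypothetical household: the 16-year-old is not eligible.
household = [(45, "F"), (19, "M"), (30, "M"), (16, "M")]
chosen = select_landline_respondent(household, ask_male_first=True)  # (19, "M")
```

The rule is deterministic once the male-first or female-first assignment is made, which is why it favors younger adults rather than giving every household member an equal chance.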

Unlike a landline phone, a cell phone is assumed in Pew Research polls to be a personal device. Interviewers ask if the person who answers the cell phone is 18 years of age or older to determine if the person is eligible to complete the survey. This means that, for those in the cell sample, no effort is made to give other household members a chance to be interviewed. Although some people share cell phones, it is still uncertain whether the benefits of sampling among the users of a shared cell phone outweigh the disadvantages.

Interviewing

Interviewing was conducted at Princeton Data Source under the direction of Princeton Survey Research Associates International. Interviews for both surveys were conducted in English and Spanish. For the standard survey, at least 7 attempts were made to complete an interview at every sampled landline and cell phone number. For the high-effort survey, at least 25 attempts were made to complete an interview at every landline number sampled and at least 15 attempts were made for every cell phone number. For both surveys, the calls were staggered over times of day and days of the week (including at least one daytime call) to maximize the chances of making contact with a potential respondent. Interviewing was also spread as evenly as possible across the field period. An effort was made to recontact most interview breakoffs and refusals to attempt to convert them to completed interviews.

In the standard survey, people reached on cell phones were offered $5 compensation for the minutes used to complete the survey on their cell phone. In the high-effort survey, a $10 monetary incentive was initially offered to everyone, regardless of what phone they were reached on. After the first five weeks of the field period, the monetary incentive was increased to $20, and all noncontacts and refusals in the landline frame for whom we could match an address were sent a letter with a $2 incentive. Incentives and mailed letters were used in the high-effort survey because they have been shown to boost participation in many types of surveys. In the high-effort survey, interviewers also left voicemails when possible, for both landlines and cell phones, that introduced the study and mentioned the incentive. After the first five weeks of the high-effort survey, all calls were made by elite interviewers: experienced interviewers who have a proven record of persuading reluctant respondents to participate.

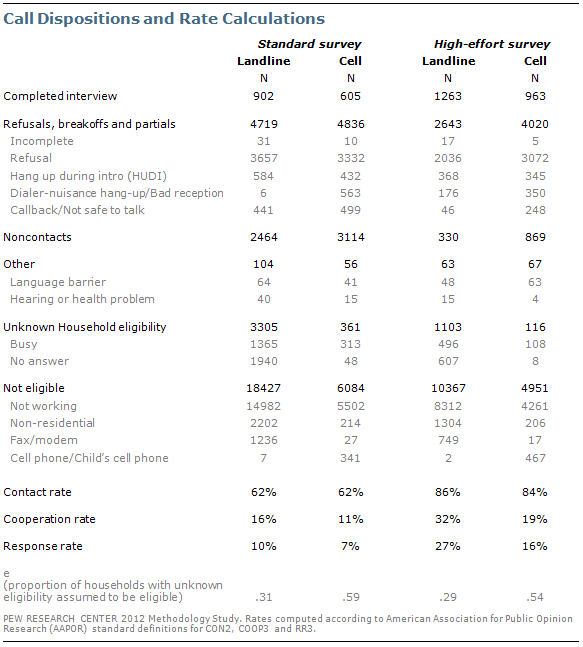

The combined response rate for the standard survey was 9%: 10% in the landline frame and 7% in the cell frame. This response rate is comparable to other surveys using similar procedures conducted by the Pew Research Center and other major opinion polls. The combined response rate for the high-effort survey was 22%: 27% in the landline frame and 16% in the cell frame. The response rate is the percentage of known or assumed residential households for which a completed interview was obtained. See the table at the end of the methodology for full call dispositions and rate calculations. The response rate reported is the American Association for Public Opinion Research’s Response Rate 3 (RR3) as outlined in its Standard Definitions.
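For readers unfamiliar with RR3, the sketch below shows the calculation using AAPOR's disposition categories: completes (I), partials (P), refusals and break-offs (R), non-contacts (NC), other eligible non-interviews (O), unknown if household (UH), unknown other (UO), and e, the estimated share of unknown-eligibility cases that are actually eligible. The function name is an assumption and the counts in the example are hypothetical, not the dispositions from these surveys.

```python
def response_rate_3(I, P, R, NC, O, UH, UO, e):
    """AAPOR Response Rate 3.

    RR3 = I / ((I + P) + (R + NC + O) + e * (UH + UO))

    e is the estimated proportion of cases of unknown eligibility that
    are eligible; how it is estimated is left to the researcher.
    """
    if not 0.0 <= e <= 1.0:
        raise ValueError("e must be a proportion between 0 and 1")
    denominator = (I + P) + (R + NC + O) + e * (UH + UO)
    return I / denominator


# Hypothetical dispositions, chosen only to make the arithmetic easy to follow:
# 100 / (100 + 500 + 0.5 * 400) = 100 / 800 = 0.125
rate = response_rate_3(I=100, P=0, R=300, NC=200, O=0, UH=400, UO=0, e=0.5)
```

Because unknown-eligibility cases are discounted by e rather than counted in full, RR3 falls between the most conservative rate (which treats all such cases as eligible) and rates that exclude them entirely.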

Weighting

The landline sample is first weighted by household size to account for the fact that people in larger households have a lower probability of being selected. In addition, the combined landline and cell phone sample is weighted to adjust for the overlap of the landline and cell frames (since people with both a landline and cell phone have a greater probability of being included in the sample), including the size of the completed sample from each frame and the estimated ratio of the size of the landline frame to the cell phone frame.
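One common way to implement this kind of first-stage adjustment is to weight each respondent by the inverse of an approximate selection probability that combines both frames. The sketch below follows that generic approach; it is an assumption for illustration and not necessarily the exact formula used for these surveys. The function name, the parameter names, and the frame ratio of 1.0 in the example are hypothetical.

```python
def base_weight(has_landline, has_cell, adults_in_household,
                n_landline, n_cell, frame_ratio):
    """Relative first-stage weight for one respondent.

    n_landline, n_cell: completed interviews from each frame.
    frame_ratio: estimated size of the landline frame divided by the
                 size of the cell phone frame.
    The landline route selects a household and then one of its adults,
    so its probability is divided by the number of adults. The cell
    route treats the phone as a personal device.
    """
    if not (has_landline or has_cell):
        raise ValueError("respondent must be reachable in at least one frame")
    prob = 0.0
    if has_landline:
        prob += (n_landline / frame_ratio) / adults_in_household
    if has_cell:
        prob += n_cell
    return 1.0 / prob  # relative weight; rescale as needed afterwards


# Standard-survey sample sizes from the text; frame_ratio=1.0 is hypothetical.
dual_user = base_weight(True, True, 2, n_landline=902, n_cell=605, frame_ratio=1.0)
cell_only = base_weight(False, True, 2, n_landline=902, n_cell=605, frame_ratio=1.0)
```

Under this sketch a dual user receives a smaller weight than a cell-only respondent, and a landline-only respondent's weight grows with the number of adults in the household, matching the two adjustments described above.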

The sample is then weighted to population parameters using an iterative technique that matches gender, age, education, race, Hispanic origin and nativity, region, population density and telephone status and usage. The population parameters for age, education, race/ethnicity, and region are from the Current Population Survey’s March 2011 Annual Social and Economic Supplement and the parameter for population density is from the Decennial Census. The parameter for telephone status and relative usage (of landline phone to cell phone for those with both) is based on extrapolations from the 2011 National Health Interview Survey. The specific weighting parameters are: gender by age, gender by education, age by education, race/ethnicity (including Hispanic origin and nativity), region, density and telephone status and usage; non-Hispanic whites are also balanced on age, education and region. The weighting procedure balances the distributions of all weighting parameters simultaneously. The final weights are trimmed to prevent individual cases from having too much influence on the final results.
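The iterative technique described here is commonly implemented as raking (iterative proportional fitting), followed by trimming. The sketch below illustrates the general idea on two variables. The function names, the convergence settings, the toy respondents, and the target shares are all hypothetical, and the actual procedure uses many more parameters than shown.

```python
def rake(rows, targets, max_iter=100, tol=1e-8):
    """Iteratively adjust weights until each margin matches its target.

    rows: list of dicts, one per respondent, e.g. {"gender": "F", "age": "50+"}.
    targets: {variable: {category: population share}}; shares sum to 1.
    Returns one weight per respondent.
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        max_change = 0.0
        for var, shares in targets.items():
            # Current weighted total in each category of this variable.
            totals = {}
            for row, w in zip(rows, weights):
                totals[row[var]] = totals.get(row[var], 0.0) + w
            grand_total = sum(weights)
            for i, row in enumerate(rows):
                factor = shares[row[var]] * grand_total / totals[row[var]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        if max_change < tol:  # every margin already matches its target
            break
    return weights


def trim(weights, low, high):
    """Cap extreme weights so no single case dominates the estimates."""
    return [min(max(w, low), high) for w in weights]


# Hypothetical respondents and hypothetical population targets.
rows = (
    [{"gender": "F", "age": "18-49"}] * 3 + [{"gender": "F", "age": "50+"}] * 2
    + [{"gender": "M", "age": "18-49"}] * 1 + [{"gender": "M", "age": "50+"}] * 4
)
targets = {
    "gender": {"F": 0.52, "M": 0.48},
    "age": {"18-49": 0.55, "50+": 0.45},
}
weights = trim(rake(rows, targets), low=0.2, high=5.0)
```

Trimming trades a small amount of bias for lower variance: once weights are capped, the weighted margins may no longer match the targets exactly.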

Sampling Error

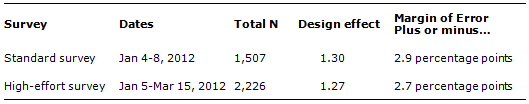

Sampling error results from collecting data from some, rather than all, members of the population. The standard survey of 1,507 adults had a margin of error of plus or minus 2.9 percentage points at the 95% confidence level. This means that in 95 out of every 100 samples of the same size and type, the results we obtain would vary by no more than plus or minus 2.9 percentage points from the result we would get if we could interview every member of the population. Thus, the chances are very high (95 out of 100) that any sample we draw will be within 2.9 points of the true population value. The high-effort survey of 2,226 adults had a margin of error of plus or minus 2.7 percentage points. The margins of error reported and statistical tests of significance are adjusted to account for each survey’s design effect, a measure of how much efficiency is lost in the sample design and weighting procedures when compared with a simple random sample. The design effect for the standard survey was 1.30 and for the high-effort survey was 1.27.
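The relationship between sample size, design effect and margin of error can be shown with a short calculation. The sketch below uses the conventional formula for a proportion, taking p = 0.5 as the most conservative case and z = 1.96 for 95% confidence; these are standard assumptions rather than details stated in the text, and the function name is illustrative.

```python
import math


def margin_of_error(n, design_effect=1.0, p=0.5, z=1.96):
    """Margin of error for a proportion, inflated by the design effect.

    n: number of completed interviews.
    design_effect: variance inflation relative to a simple random sample.
    p: assumed proportion (0.5 gives the widest margin).
    z: critical value (1.96 for 95% confidence).
    """
    return z * math.sqrt(design_effect * p * (1 - p) / n)


# Standard survey figures from the text: n = 1,507 and design effect = 1.30.
moe = margin_of_error(1507, design_effect=1.30)
print(f"{100 * moe:.1f} percentage points")  # prints "2.9 percentage points"
```

Equivalently, the design effect can be read as shrinking the sample: a design effect of 1.30 means the 1,507 interviews carry roughly the precision of a simple random sample of 1,507 / 1.30, or about 1,159, respondents.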

The following table shows the survey dates, sample sizes, design effects and the error attributable to sampling that would be expected at the 95% level of confidence for the total sample:

In addition to sampling error, one should bear in mind that question wording and practical difficulties in conducting surveys can introduce error or bias into the findings of opinion polls.

Government Benchmarks

Comparisons were made to benchmarks from several government surveys throughout the report. Many of the comparisons are from the Current Population Survey, including the March 2011 Annual Social and Economic Supplement, the September 2011 Volunteering Supplement, the November 2010 Voting Supplement and the Civic Engagement Supplement, as well as the October 2010 Computer and Internet Use Supplement. Comparisons are also made to the 2010 National Health Interview Survey. For most comparisons, an effort was made to match the question wording used in the survey to that used in the government survey. See the topline for full details about which survey a benchmark is from and whether there are any differences in the question wording.