Teacher survey methodology

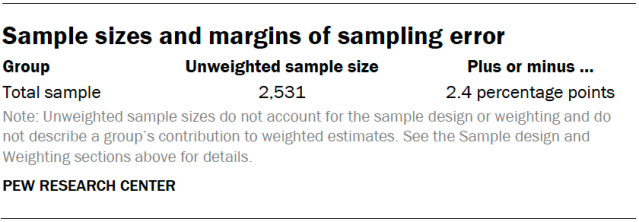

The data in this report comes from a self-administered web survey of K-12 public school teachers in the United States. It was conducted online in English from Oct. 17 to Nov. 14, 2023. Out of 6,357 teachers who were sampled, 191 were screened out as no longer eligible. A total of 2,531 completed the survey, for a completion rate of 41.0%. The margin of sampling error for the full sample of 2,531 teachers is plus or minus 2.4 percentage points. The survey was administered by RAND on its American Teacher Panel, a nationally representative panel of public K-12 teachers.
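The figures above can be checked with a few lines of arithmetic. This sketch assumes that the completion rate is completes divided by sampled teachers who remained eligible, and that the ±2.4-point margin refers to a 50% estimate at the 95% confidence level. Comparing that margin with a simple-random-sampling margin backs out an implied design effect. The report does not state a design effect for this survey, and the published margin is rounded, so treat that number as approximate.

```python
import math

def completion_rate(completes: int, sampled: int, ineligible: int) -> float:
    """Completes divided by sampled cases that were still eligible."""
    return completes / (sampled - ineligible)

def srs_margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a proportion under simple random sampling (no design effect)."""
    return z * math.sqrt(p * (1 - p) / n)

rate = completion_rate(2531, 6357, 191)   # 2,531 / 6,166
srs_moe = srs_margin_of_error(2531)       # about 1.9 points without weighting
reported_moe = 0.024                      # published margin (rounded)
# Assumption: the published margin already includes the weighting design effect
implied_deff = (reported_moe / srs_moe) ** 2

print(f"completion rate: {rate:.1%}")
print(f"SRS margin of error: {srs_moe:.1%}")
print(f"implied design effect: {implied_deff:.2f}")
```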

The American Teacher Panel is recruited through probability-based methods from a commercially available list of U.S. public school teachers. The sampling frame for recruiting teachers to the panel was acquired from MDR Education. It is intended to be as comprehensive as possible, yet likely underrepresents new teachers, or experienced teachers new to a school or district. The American Teacher Panel began in 2014 and currently includes more than 25,000 teachers. Teachers recruited to the American Teacher Panel have agreed to participate in online surveys several times per school year and receive incentives for completing surveys.

Teachers selected to join the American Teacher Panel are first sent an invitation packet via FedEx that includes a prepaid $10 gift card as an incentive. Reminder emails are sent to nonrespondents whose contact information includes an email address. The American Teacher Panel has an overall empanelment rate of 30%. Read additional details about the American Teacher Panel’s design and methodology.

Sample design

The target population for this survey was K-12 public school teachers in the United States. A total of 6,357 teachers were invited to participate in this survey. This includes an initial sample of 5,000 teachers and a supplemental sample of 1,357 teachers that was later drawn to achieve the targeted sample size of 2,500 completed interviews. Because teachers are invited to join the American Teacher Panel at varying rates, each teacher's probability of selection for this survey was inversely proportional to their estimated probability of membership in the American Teacher Panel. This was done so that each teacher had approximately the same overall probability of having both joined the American Teacher Panel and been selected for this survey.
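The selection rule described above can be sketched as follows: selection probabilities proportional to 1 / (membership probability), scaled so that they sum to the desired expected sample size. The function name and the membership probabilities are hypothetical; RAND's actual estimation of membership probabilities is not shown. The point of the example is that the product of membership and selection probabilities comes out the same for every teacher.

```python
def selection_probabilities(membership_probs: list[float], n_target: float) -> list[float]:
    """Hypothetical helper: selection probability proportional to 1 / membership probability,
    scaled so the expected number selected equals n_target."""
    inverse = [1.0 / p for p in membership_probs]
    scale = n_target / sum(inverse)
    probs = [scale * x for x in inverse]
    if max(probs) > 1.0:
        raise ValueError("n_target too large: some selection probabilities exceed 1")
    return probs

# Hypothetical estimated membership probabilities for four panel members
membership = [0.1, 0.2, 0.4, 0.4]
selection = selection_probabilities(membership, n_target=1)

# Overall probability of being on the panel AND selected for this survey
overall = [m * s for m, s in zip(membership, selection)]
```

Each overall probability here equals 0.05, so teachers who were less likely to join the panel are compensated by a higher chance of selection. Members could then be drawn independently, for example with `rng.random() < p` for each member (Poisson sampling); whether RAND used that draw method is not stated in the source.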

Data collection protocol

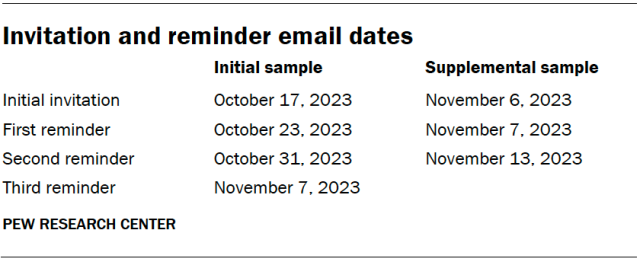

The field period for this survey was Oct. 17-Nov. 14, 2023. After an initial invitation email, nonresponding teachers in the initial sample received up to three reminder emails. Nonrespondents in the supplemental sample received up to two reminder emails.

Weighting

The data was weighted in a multistep process that accounts for multiple stages of sampling and nonresponse that occur at different points in the survey process. Every American Teacher Panel member begins with a design weight that accounts for their initial probability of selection for their recruitment survey. That weight is further calibrated so that the composition of the entire panel aligns with that of the national population of public school teachers based on data from the National Center for Education Statistics on a combination of school and teacher characteristics.

Weighting parameters for the following school-level characteristics are based on the 2021-2022 Common Core of Data (CCD):

- School level

- School percent free or reduced-price lunch

- School percent minority

- School size

- School locale

Weighting parameters for the following teacher-level characteristics are based on the 2020-2021 National Teacher and Principal Survey (NTPS):

- Teacher gender

- Teacher race

- Teacher experience

Among respondents, this weight was first adjusted to account for each teacher's probability of selection for this wave. A second adjustment was then applied to account for each respondent's probability of completing the survey after having been selected; this probability was estimated with a model based on teacher and school characteristics. In a third step, the nonresponse-adjusted weights were once again calibrated so that the composition of the sample aligns with that of the target population based on the weighting parameters listed above. Finally, the weights were trimmed at the 95th percentile to reduce the loss in precision stemming from variance in the weights.
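A minimal sketch of the first, second and final steps, assuming each adjustment divides the weight by the corresponding probability and that trimming caps weights at the 95th percentile without redistributing the excess. The calibration step is omitted for brevity, and all inputs are hypothetical.

```python
import statistics

def adjust_for_selection_and_response(panel_weights, p_select, p_respond):
    """Divide each panel weight by the wave selection probability and by the
    modeled probability of responding once selected."""
    return [w / (s * r) for w, s, r in zip(panel_weights, p_select, p_respond)]

def trim_at_percentile(weights, pct=95):
    """Cap weights at the given percentile (linear interpolation, 'inclusive' method).
    Assumption: capped weight is not redistributed to other respondents."""
    cut_points = statistics.quantiles(weights, n=100, method="inclusive")
    cap = cut_points[pct - 1]
    return [min(w, cap) for w in weights]

# Hypothetical inputs for five respondents
panel_weights = [1.0, 1.2, 0.8, 1.5, 1.1]
p_select = [0.5, 0.5, 0.25, 0.5, 0.1]
p_respond = [0.4, 0.5, 0.4, 0.2, 0.5]

adjusted = adjust_for_selection_and_response(panel_weights, p_select, p_respond)
trimmed = trim_at_percentile(adjusted)
```

Only the largest weight (22.0) is capped, at the interpolated 95th percentile of 20.6; the other four weights pass through unchanged.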

The following table shows the unweighted sample sizes and the error attributable to sampling that would be expected at the 95% level of confidence for different groups in the survey.

Sample sizes and sampling errors for other subgroups are available upon request. In addition to sampling error, one should bear in mind that question wording and practical difficulties in conducting surveys can introduce error or bias into their findings.

School characteristics

The American Teacher Panel includes NCES school ID codes, which allowed us to match the teachers surveyed to their school characteristics as reported in NCES's Common Core of Data. Data on the following school characteristics are based on those reported by NCES: school level (elementary, middle or high), school size, location, poverty level (based on the percentage of students eligible for free or reduced-price lunch) and student demographics.

School district characteristics

Political leaning of school districts is based on whether the majority of those residing in the school district voted for Republican Donald Trump or Democrat Joe Biden in the 2020 presidential election. Vote shares for each school district from the 2020 general election are calculated using 2020 General Election Results Disaggregated to 2020 Census Blocks Datasets available at the Redistricting Data Hub. This block-level data was aggregated up to the school district level, using block-to-school district relation files from Missouri Census Data Center’s Geographic Correspondence Engine (Geocorr 2022).
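The block-to-district aggregation can be sketched as below. It assumes the crosswalk supplies an allocation factor per block/district pair (the share of a block assigned to each district), similar to the allocation factor Geocorr output typically includes, and it classifies a district by which of the two candidates received more allocated votes. The data layout, function names and vote counts are hypothetical.

```python
from collections import defaultdict

def district_vote_totals(block_votes, crosswalk):
    """block_votes: {block_id: (biden_votes, trump_votes)}
    crosswalk: iterable of (block_id, district_id, allocation_factor) rows."""
    totals = defaultdict(lambda: [0.0, 0.0])
    for block_id, district_id, factor in crosswalk:
        biden, trump = block_votes.get(block_id, (0, 0))
        totals[district_id][0] += biden * factor
        totals[district_id][1] += trump * factor
    return {d: (b, t) for d, (b, t) in totals.items()}

def leaning(biden: float, trump: float) -> str:
    if biden > trump:
        return "Biden majority"
    if trump > biden:
        return "Trump majority"
    return "tie"

# Hypothetical blocks; block b2 is split evenly between two districts
block_votes = {"b1": (120, 80), "b2": (30, 90), "b3": (50, 50)}
crosswalk = [("b1", "D1", 1.0), ("b2", "D1", 0.5), ("b2", "D2", 0.5), ("b3", "D2", 1.0)]

shares = district_vote_totals(block_votes, crosswalk)
labels = {d: leaning(*v) for d, v in shares.items()}
```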

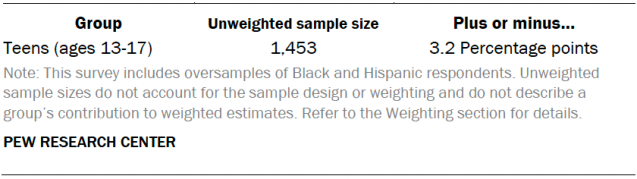

Teen survey methodology

The analysis on teens’ views is based on a self-administered web survey conducted from Sept. 26 to Oct. 23, 2023, among a sample of 1,453 U.S. teens ages 13 to 17. The margin of sampling error for the full sample of 1,453 teens is plus or minus 3.2 percentage points. The survey was conducted by Ipsos Public Affairs in English and Spanish using KnowledgePanel, its nationally representative online research panel.

The research plan for this project was submitted to an external institutional review board (IRB), Advarra, which is an independent committee of experts that specializes in helping to protect the rights of research participants. The IRB thoroughly vetted this research before data collection began. Due to the risks associated with surveying minors, this research underwent a full board review and received approval (Approval ID Pro00073203).

KnowledgePanel members are recruited through probability sampling methods and include both those with internet access and those who did not have internet access at the time of their recruitment. KnowledgePanel provides internet access for those who do not have it and, if needed, a device to access the internet when they join the panel. KnowledgePanel’s recruitment process was originally based exclusively on a national random-digit-dialing (RDD) sampling methodology. In 2009, Ipsos migrated to an address-based sampling (ABS) recruitment methodology via the U.S. Postal Service’s Delivery Sequence File (DSF). The Delivery Sequence File has been estimated to cover as much as 98% of the population, although some studies suggest that the coverage could be in the low 90% range.2

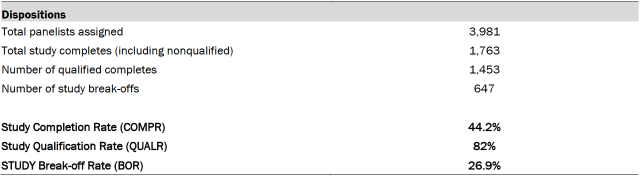

Panelists were eligible for participation in this survey if they indicated on an earlier profile survey that they were the parent of a teen ages 13 to 17. A random sample of 3,981 eligible panel members was invited to participate in the study. Responding parents were screened and considered qualified for the study if they reconfirmed that they were the parent of at least one child ages 13 to 17 and granted permission for their teen who was chosen to participate in the study. In households with more than one eligible teen, parents were asked to think about one randomly selected teen and that teen was instructed to complete the teen portion of the survey. A survey was considered complete if both the parent and selected teen completed their portions of the questionnaire, or if the parent did not qualify during the initial screening.

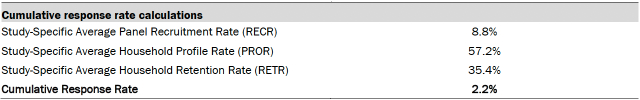

Of the sampled panelists, 1,763 (excluding break-offs) responded to the invitation and 1,453 qualified, completed the parent portion of the survey, and had their selected teen complete the teen portion of the survey, yielding a final stage completion rate of 44% and a qualification rate of 82%. The cumulative response rate accounting for nonresponse to the recruitment surveys and attrition is 2.2%. The break-off rate among those who logged on to the survey (regardless of whether they completed any items or qualified for the study) is 26.9%.
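The two final-stage rates can be reproduced from the counts above, assuming the completion rate is responders divided by invited panelists and the qualification rate is qualified completes divided by responders:

```python
def final_stage_rates(invited: int, responded: int, qualified_complete: int) -> tuple[float, float]:
    """Return (completion rate, qualification rate) for the final survey stage."""
    completion = responded / invited
    qualification = qualified_complete / responded
    return completion, qualification

comr, qr = final_stage_rates(invited=3981, responded=1763, qualified_complete=1453)
print(f"completion rate: {comr:.0%}, qualification rate: {qr:.0%}")
```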

Upon completion, qualified respondents received a cash-equivalent incentive worth $10 for completing the survey. To encourage response from non-Hispanic Black panelists, the incentive was increased from $10 to $20 on Oct. 5, 2023. The incentive was increased again on Oct. 10, 2023, from $20 to $40; then to $50 on Oct. 17, 2023; and to $75 on Oct. 20, 2023. Reminders and notifications of the change in incentive were sent for each increase.

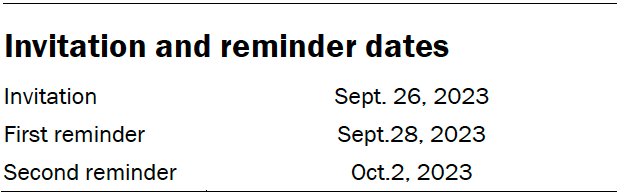

All panelists received email invitations, and any nonresponders received reminders, as shown in the table. The field period was closed on Oct. 23, 2023.

Weighting

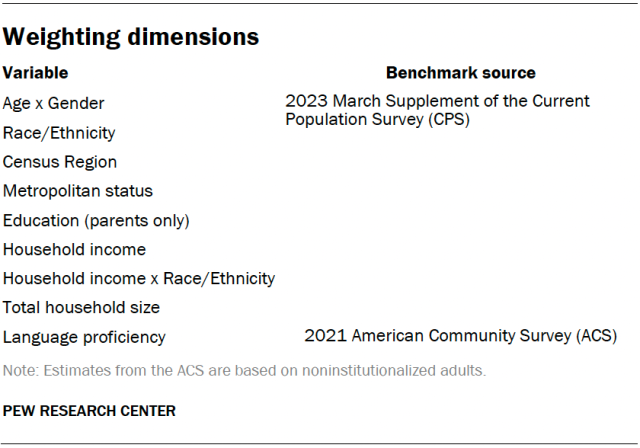

The analysis in this report was performed using separate weights for parents and teens. The parent weight was created in a multistep process that begins with a base design weight for the parent, which is computed to reflect their probability of selection for recruitment into the KnowledgePanel. These selection probabilities were then adjusted to account for the probability of selection for this survey, which included oversamples of Black and Hispanic parents. Next, an iterative technique was used to align the parent design weights to population benchmarks for parents of teens ages 13 to 17 on the dimensions identified in the accompanying table, to account for any differential nonresponse that may have occurred.

To create the teen weight, an adjustment factor was applied to the final parent weight to reflect the selection of one teen per household. Finally, the teen weights were further raked to match the demographic distribution for teens ages 13 to 17 who live with parents. The teen weights were adjusted on the teen equivalents of the parent dimensions, with the exception of education, which was not used in the teen weighting.
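The report does not give the adjustment factor, but a common choice, assumed here, is the inverse of the teen's within-household selection probability: if one of k eligible teens is chosen at random, the parent weight is multiplied by k. The function names and household are hypothetical, and the subsequent raking step is not shown.

```python
import random

def select_one_teen(eligible_teens: list[str], rng: random.Random) -> str:
    """Randomly choose one eligible teen; each has a 1/k chance of selection."""
    return rng.choice(eligible_teens)

def teen_base_weight(parent_weight: float, n_eligible_teens: int) -> float:
    """Assumed adjustment: multiply by k, the inverse of the 1/k selection probability."""
    if n_eligible_teens < 1:
        raise ValueError("household must have at least one eligible teen")
    return parent_weight * n_eligible_teens

rng = random.Random(2023)
household = ["teen_a", "teen_b", "teen_c"]  # hypothetical household with three eligible teens
chosen = select_one_teen(household, rng)
weight = teen_base_weight(1.3, len(household))
```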

Sampling errors and tests of statistical significance take into account the effect of weighting. Interviews were conducted in both English and Spanish.

In addition to sampling error, one should bear in mind that question wording and practical difficulties in conducting surveys can introduce error or bias into the findings of opinion polls.

The following table shows the unweighted sample sizes and the error attributable to sampling that would be expected at the 95% level of confidence for different groups in the survey:

Sample sizes and sampling errors for subgroups are available upon request.

Dispositions and response rates

The tables below display dispositions used in the calculation of completion, qualification and cumulative response rates.3

General public survey methodology

Ipsos KnowledgePanel and Omnibus methodology

Ipsos delivers affordable, statistically valid online research through KnowledgePanel®. KnowledgePanel is the first and largest online research panel that is representative of the entire U.S. population. Panel members are randomly recruited through probability-based sampling, and households are provided with access to the Internet and hardware if needed.

Ipsos recruits panel members using address-based sampling (ABS) methods to ensure full coverage of all households in the nation. Once household members are recruited for the panel and assigned to a study sample, they are notified by email that a survey is available, or they can take surveys by visiting their online member page (instead of being contacted by telephone or postal mail). This allows surveys to be fielded quickly and economically. In addition, this approach reduces the burden placed on respondents, since email notification is less intrusive than telephone calls, and the self-administered mode minimizes the social desirability bias and positivity effects that can be present with an interviewer. Many respondents find answering online questionnaires more interesting and engaging than being questioned by a telephone interviewer. Furthermore, respondents can choose the day and time to complete their assigned survey.

KnowledgePanel methodology

KnowledgePanel provides probability-based samples with an “organic” representation of the study population for measurement of public opinions, attitudes and behaviors. The panel was first developed in 1999 by Knowledge Networks, an Ipsos company. Panel members are randomly selected so that survey results can properly represent the U.S. population with a measurable level of accuracy and a calculable response rate, features that are not obtainable from nonprobability or opt-in online panels.

KnowledgePanel’s recruitment process was originally based exclusively on a national random-digit dial (RDD) sampling methodology. In 2009, in light of the growing proportion of cellphone-only households, Ipsos migrated to an ABS recruitment methodology via the U.S. Postal Service’s Delivery Sequence File. ABS not only improves population coverage, but also provides a more effective means for recruiting hard-to-reach individuals, such as cellphone-only households, non-internet households, young adults and persons of color. Households without an internet connection are provided with a web-enabled device and free internet service.

After initially accepting the invitation to join the panel, participants are asked to complete a short demographic survey (the initial Core Profile Survey); answers to this survey allow efficient panel sampling and weighting for future surveys. Upon completing the Core Profile Survey, participants become active panel members. All panel members are provided privacy and confidentiality protections.

Adults from sampled households are invited to join KnowledgePanel through a series of mailings, including an initial invitation letter, a reminder postcard and a subsequent follow-up letter. Moreover, telephone refusal-conversion calls are made to nonresponding households for which a telephone number could be matched to a physical address. Invited households can join the panel by:

- Completing and mailing back a paper form in a postage-paid envelope

- Calling a toll-free hotline phone number maintained by Ipsos

- Going to a designated Ipsos website and completing the recruitment form online

KnowledgePanel Latino℠ recruitment

In 2008, KnowledgePanel Latino℠ was developed to provide researchers with the capability to conduct representative online surveys with U.S. Hispanics, including both English- and Spanish-dominant Hispanics. With the advent of KnowledgePanel Latino, the first U.S. online panel representative of Hispanics was established to include those without internet access and those who only speak Spanish. Hispanic members recruited through KnowledgePanel's traditional ABS sampling methodology described above are supplemented with recruitment using a custom dual-frame RDD sampling methodology targeting telephone exchanges associated with census blocks that have a 65% or greater Latino population density (this density level covers just over 50% of the U.S. Hispanic population). Moreover, cellular numbers from rate centers with a high concentration of Hispanics are also used to improve the representation of samples. With this telephone recruitment, households are screened in Spanish so that only homes where Spanish is spoken at least half the time are recruited.

Household member recruitment

During the initial recruitment survey, all household members are enumerated. Following enumeration, attempts are made to recruit every household member who is at least 13 years old to participate in KnowledgePanel surveys. For household members ages 13 to 17, consent is collected from a parent or legal guardian during the initial recruitment interview. No direct communication with teenagers is attempted before obtaining parental consent.

Survey sampling from KnowledgePanel

Once panel members are recruited and profiled by completing KnowledgePanel’s Core Profile Survey, they become eligible for selection for client surveys. Typically, specific survey samples are based on an equal probability selection method (EPSEM) for general population surveys. Customized stratified random sampling based on “profile” data can also be implemented as required by the study design. Profile data can also be used when a survey calls for pre-screening – that is, members are drawn from a subsample of the panel, such as females, Republicans, grocery shoppers, etc. (This can reduce screening costs, particularly for lower incidence subgroups.) In such cases, Ipsos ensures that all subsequent survey samples drawn that week are selected in such a way as to result in a sample that remains representative of the population distributions.

As detailed above, significant resources and infrastructure are devoted to the recruitment process for KnowledgePanel so that its active panel members can properly represent the adult population of the United States. This representation is achieved not only with respect to a broad set of geodemographic indicators, but also for hard-to-reach adults (such as those without internet access or Spanish-language-dominant Hispanics) who are recruited in proper proportions. Consequently, the raw distribution of KnowledgePanel mirrors that of U.S. adults fairly closely, barring occasional disparities that emerge for certain subgroups due to differential recruitment and attrition.

For selection of general population samples from KnowledgePanel, a patented methodology has been developed such that samples from the panel behave as EPSEM samples. Briefly, this methodology starts by weighting the pool of active members to geodemographic benchmarks drawn from a combination of the U.S. Census Bureau's American Community Survey (ACS) and the latest March supplement of the Census Bureau's Current Population Survey (CPS). Typically, the dimensions used for weighting the entire KnowledgePanel include the following, with some dimensions also nested within others:

- Gender (male/female)

- Age (18 to 29, 30 to 44, 45 to 59, and 60+)

- Race/Hispanic ethnicity (White/non-Hispanic, Black/non-Hispanic, Other or 2+ races/non-Hispanic, Hispanic)

- Education (less than high school, high school, some college, bachelor’s and beyond)

- Census Region (Northeast, Midwest, South, West)

- Household income (under $10,000, $10,000 to $24,999, $25,000 to $49,999, $50,000 to $74,999, $75,000 to $99,999, $100,000 to $149,999, and $150,000+)

- Home ownership status (own, rent/other)

- Household size (1, 2, 3, 4+)

- Metropolitan area (yes, no)

- Hispanic origin (Mexican, Puerto Rican, Cuban, other, non-Hispanic)

- Language dominance (non-Hispanic and English dominant, bilingual, and Spanish dominant Hispanic) when survey is administered in both English and Spanish

Using the resulting weights as measures of size, a probability-proportional-to-size (PPS) procedure is used to select study specific samples. It is the application of this PPS methodology with the imposed size measures that produces demographically balanced and representative samples that behave as EPSEM. Moreover, in instances where a study design requires any form of oversampling of certain subgroups, such departures from an EPSEM design are accounted for by adjusting the design weights in reference to the census benchmarks for the population of interest.
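Ipsos's patented procedure is not described in enough detail to reproduce, so the following is only a generic sketch of systematic probability-proportional-to-size (PPS) selection, using hypothetical panel weights as measures of size. A random start is chosen in the first interval, and every step-th point along the cumulated sizes selects the unit it falls in. Units larger than the sampling interval could be hit more than once; real designs typically treat such units separately.

```python
import bisect
import random
from itertools import accumulate

def systematic_pps(sizes: list[float], n: int, rng: random.Random) -> list[int]:
    """Select n unit indices with probability proportional to size (systematic PPS)."""
    cumulative = list(accumulate(sizes))
    step = cumulative[-1] / n
    start = rng.random() * step  # random start within the first interval
    picks = []
    for k in range(n):
        point = start + k * step
        idx = bisect.bisect_right(cumulative, point)
        picks.append(min(idx, len(sizes) - 1))  # guard against float round-off at the end
    return picks

rng = random.Random(7)
# Hypothetical weights from the panel-level weighting, used as measures of size
weights = [0.5, 1.0, 1.5, 2.0, 1.0, 0.5, 1.5, 2.0]
sample = systematic_pps(weights, n=3, rng=rng)
```

Because no weight here exceeds the sampling interval (10 / 3), the three selected indices are always distinct.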

Survey administration

Once assigned to a survey, members receive a notification email letting them know there is a new survey available for them to complete. This email notification contains a link that sends them to the survey. No login name or password is required. The field period depends on the client’s needs and can range anywhere from a few hours to several weeks.

Typically, after three days, automatic email reminders are sent to all nonresponding panel members in the sample. Additional email reminders are sent and custom reminder schedules are set up as needed. To assist panel members with their survey taking, each individual has a personalized member portal listing all assigned surveys that have yet to be completed.

Ipsos also operates an ongoing modest incentive program to encourage participation and create member loyalty. The incentive program includes special raffles and sweepstakes with both cash rewards and other prizes to be won. On average, panel members complete three to four surveys per month with durations of about 10 to 15 minutes per survey. An additional incentive is usually provided for longer surveys.

Response rates

As a member of the American Association for Public Opinion Research (AAPOR), Ipsos follows the AAPOR standards for response rate reporting. Because the AAPOR standards were established for single survey administrations rather than for multistage panel surveys, Ipsos uses the Callegaro-DiSogra (2008) algorithms for calculating KnowledgePanel survey response rates.
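As we understand the Callegaro-DiSogra approach, a cumulative response rate for a panel survey is the product of the rates at each stage (for example recruitment, profile completion, retention and study completion). The sketch below shows that multiplication; the stage rates are hypothetical and are not the figures for any survey in this report.

```python
import math

def cumulative_response_rate(*stage_rates: float) -> float:
    """Multiply stage-specific rates, e.g. recruitment, profile, retention, completion."""
    for rate in stage_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"rate out of range: {rate}")
    return math.prod(stage_rates)

# Hypothetical stage rates for illustration only
recr, pror, retr, comr = 0.10, 0.60, 0.40, 0.44
cumrr = cumulative_response_rate(recr, pror, retr, comr)
```

The example shows why a cumulative rate can be very low even when the final-stage completion rate is moderate: each earlier stage multiplies it down.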

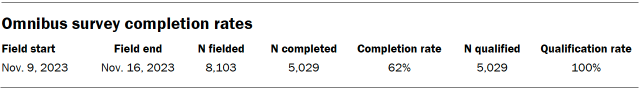

Omnibus survey completion rates

The field period and completion and qualification rates for this survey are presented below:

Data Processing to Ensure Data Quality

Respondents are unable to complete the survey more than once (after completing the survey one time, respondents no longer have access to the survey). All respondents were considered eligible. No interviews were dropped. Data were imputed for demographics used in weighting if the respondent declined to answer. Ipsos did not conduct any additional data imputation for this survey.

Survey Cooperation Enhancements

As standard with KnowledgePanel surveys, email reminders were sent to non-responders on Day 3 of the field period. Additional reminders were sent to any remaining non-responders on Days 5 and 7 of the field period.

Upon completion of the survey, qualified respondents received a cash-equivalent incentive based on their sample classification and when they completed the survey.

Limitations of the Design and Data Collection

All forms of public opinion research are subject to unmeasured error that cannot be eliminated. When a probability-based panel like KnowledgePanel is used, Ipsos employs the total survey error approach to identify and minimize error due to coverage error, sampling error, nonresponse error, measurement error, and data processing and editing error. Coverage error is addressed in KnowledgePanel recruitment strategies. Sampling error is addressed in recruitment and at the time of sample selection for each study. Nonresponse error is reduced in recruitment, study sampling, panel management strategies (including communication, incentive, and retention protocols), and weighting of the final data. These strategies support the computation of sampling error to estimate the extent to which the results from the sample might differ from population values. To reduce measurement error, Ipsos research staff evaluates questionnaires in terms of item flow, wording, and response formats to provide respondent-friendly surveys and elicit high-quality data. Additionally, Ipsos conducts a quality control review of data processing steps and any data cleaning to minimize errors.

Ipsos KnowledgePanel weighting

Once all survey data have been collected and processed, design weights are adjusted to account for any differential nonresponse that may have occurred. Depending on the specific target population for a given study, geodemographic distributions for the corresponding population are obtained from the CPS, the U.S. Census Bureau’s American Community Survey (ACS), or in certain instances from the weighted KnowledgePanel profile data. For this purpose, an iterative proportional fitting (raking) procedure is used to produce the final weights. In the final step, calculated weights are examined to identify and, if necessary, trim outliers at the extreme upper and lower tails of the weight distribution. The resulting weights are then scaled to aggregate to the total sample size of all eligible respondents.
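Raking can be sketched in plain Python: weights are repeatedly scaled so that each dimension's weighted distribution matches its benchmark, cycling through the dimensions until the adjustments become negligible, and the result is scaled to sum to the number of respondents. This is a generic illustration with hypothetical dimensions and targets; it is not Ipsos's production code, and the trimming step is omitted.

```python
from collections import defaultdict

def rake(weights, categories, targets, max_iter=1000, tol=1e-10):
    """Iterative proportional fitting.
    categories: {dimension: [category label for each respondent]}
    targets:    {dimension: {category label: target proportion}}"""
    for dim, labels in categories.items():
        missing = [c for c, t in targets[dim].items() if t > 0 and c not in labels]
        if missing:
            raise ValueError(f"no respondents in {dim} categories {missing}")
    w = list(weights)
    total = sum(w)
    for _ in range(max_iter):
        max_change = 0.0
        for dim, labels in categories.items():
            margin = defaultdict(float)
            for wi, label in zip(w, labels):
                margin[label] += wi
            factors = {c: targets[dim][c] * total / margin[c] for c in margin}
            max_change = max(max_change, max(abs(f - 1.0) for f in factors.values()))
            w = [wi * factors[label] for wi, label in zip(w, labels)]
        if max_change < tol:
            break
    return w

def scale_to_sample_size(weights):
    """Rescale so the weights sum to the number of respondents."""
    n, s = len(weights), sum(weights)
    return [wi * n / s for wi in weights]

# Hypothetical respondents and benchmarks
base = [1.0] * 6
categories = {
    "gender": ["M", "M", "M", "F", "F", "F"],
    "age": ["18-44", "45+", "45+", "18-44", "18-44", "45+"],
}
targets = {"gender": {"M": 0.4, "F": 0.6}, "age": {"18-44": 0.5, "45+": 0.5}}
scaled = scale_to_sample_size(rake(base, categories, targets))
```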

For this study, the following benchmark distributions of U.S. adults age 18 and over from the 2023 CPS were used for the raking adjustment of weights:

- Gender (male, female) by Age (18 to 29, 30 to 44, 45 to 59, and 60+)

- Race/Hispanic ethnicity (White/non-Hispanic, Black/non-Hispanic, Other or 2+ Races/non-Hispanic, Hispanic)

- Education (high school graduate or less, some college, bachelor’s and beyond)

- Census region (Northeast, Midwest, South, West)

- Metropolitan status (Metro, non-Metro)

- Household income (Less than $25,000, $25,000 to $49,999, $50,000 to $74,999, $75,000 to $99,999, $100,000 to $149,999, $150,000+)

- Race/ethnicity (White or Other, Black, Hispanic) by Gender (male, female)

- Race/ethnicity (White or Other, Black, Hispanic) by Age (18-44, 45+)

- Race/ethnicity (White or Other, Black, Hispanic) by Education (less than college, bachelor's or higher)

Design Effect and Margin of Sampling Error*

Technically, a margin of sampling error (MOSE) is survey- and estimate-specific. It is affected by the survey estimate (point estimate, proportion, mean, etc.), the number of cases, and the unequal weighting effect (often called a design effect). Here we provide the MOSE at the 95% confidence level for a point estimate equal to 50% (where the MOSE is at its widest), computed over all respondents.

Weight: design effect of 1.10; MOSE of ±1.4 percentage points (95% confidence level)

* Margin of error is inclusive of the design effect.
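A design effect due to unequal weighting is often approximated with Kish's formula, n·Σw² / (Σw)², which equals 1 + CV² of the weights. The margin at p = 0.5 then scales with its square root. The sketch below assumes that approximation; the report does not say exactly how the 1.10 figure was computed, and the weights used here are hypothetical.

```python
import math

def kish_design_effect(weights: list[float]) -> float:
    """Unequal weighting effect: n * sum(w^2) / (sum(w))^2, i.e. 1 + CV^2 of the weights."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2

def margin_of_sampling_error(n: int, deff: float, p: float = 0.5, z: float = 1.96) -> float:
    """MOSE for a proportion, inflated by the design effect."""
    return z * math.sqrt(deff * p * (1 - p) / n)

deff = kish_design_effect([1.0, 1.0, 2.0, 2.0])  # hypothetical weights -> 10/9
mose_srs = margin_of_sampling_error(100, 1.0)      # no weighting effect
mose_weighted = margin_of_sampling_error(100, deff)
```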