Recruitment

All current members of the American Trends Panel (ATP) were originally recruited from the 2014 Political Polarization and Typology Survey, a large (n=10,013) national landline and cellphone RDD survey conducted Jan. 23 to March 16, 2014, in English and Spanish. At the end of that survey, respondents were invited to join the panel. The invitation was extended to all respondents who use the internet (from any location) and also to most respondents who do not use the internet.1

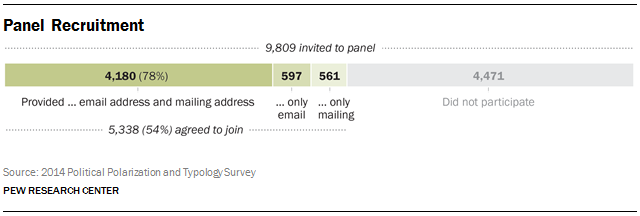

Of the 10,013 adults interviewed, 9,809 were invited to take part in the panel. Half were invited to “participate in future surveys,” while the other half were invited to “join our American Trends Panel” as part of an experiment to test the best way to frame panel participation. All other characteristics of the panel were explained to respondents in exactly the same way.

Respondents were told they would be paid $10 for agreeing to participate in monthly surveys on different topics, and internet users were told the surveys would be taken online. Additionally, they would be given $5 for each survey they completed. Hispanic respondents and young adults ages 18-25 were offered $10 per survey because these groups historically have been less likely than others to take part in panels. Respondents were then asked for their email and mailing addresses.

The 5,338 respondents who initially agreed to join the panel were sent a welcome packet in either English or Spanish, as appropriate. The 4,741 panelists who provided a mailing address were mailed a packet that contained a cover letter, a $10 bill for agreeing to join the panel and a brochure about the panel. Both the letter and the brochure contained the Web address for the ATP website (with English and Spanish webpage content). The 4,777 panelists who provided an email address were also emailed the cover letter that had a link to the ATP website and a digital copy of the ATP brochure. Included in the 4,777 are the 597 panelists who provided only an email address and no mailing address. They were separately emailed a $10 Amazon gift card for joining.

Monthly Data Collection Protocol

Once empaneled, panelists were sent approximately one survey each month on a variety of topics. These were in English or Spanish based on each panelist’s preference. The length of the surveys varied between 15 and 20 minutes. Topics covered included politics, media consumption, personality traits, religion, the internet, use of technology and knowledge about science and other subjects. Panelists with internet access (“Web panelists”) took the surveys online, while the panelists without internet access, and a small number of panelists who had the internet but didn’t have or wouldn’t provide an email address (“non-Web panelists”), took the surveys via another mode. This second mode was typically mail, but telephone mode was used in two instances (once in the first panel wave, and again in Wave 5 for an experiment in testing the effects of mode of administration).

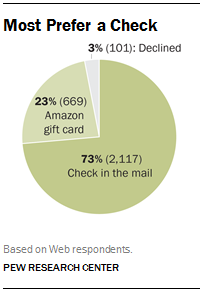

For each survey, the Web panelists were sent an email invitation with a unique link to the survey questionnaire. At the same time, they were mailed a postcard alerting them to check their email inbox for the next survey invitation. Up to four email reminders were sent to Web non-respondents. Once panelists had taken the survey, they were sent their contingent incentive. At the end of the first panel survey, we asked respondents if they would prefer the money as an Amazon gift card or as a check, and this payment method was used for the subsequent panel waves.

Non-Web panelists received up to three mailings for each survey. The first full mailing was sent via first class mail for English-speaking panelists and via priority mail for Spanish-speaking panelists to convey importance and to make up time lost due to translation. The mailing included a cover letter with a prepaid incentive of a $5 (or $10) bill affixed with a glue dot. The mailing also contained a questionnaire in English or Spanish and a self-addressed, stamped return envelope. All respondents were sent a reminder postcard. Then a second full mailing was sent to all mail panelists with a note to disregard if the questionnaire had already been returned. This second mailing, sent via first class mail to English-speaking respondents and priority mail to Spanish speakers, contained a cover letter, questionnaire and self-addressed, stamped return envelope but no new incentive.

Special Non-Respondent Follow-up Wave

During the latter part of data collection for Wave 1, a special non-respondent follow-up survey was conducted to attempt to verify contact information and to determine whether persons recruited to the panel who had not yet responded to Wave 1 intended to participate in that wave or future waves. A total of 2,228 panelists who had not yet responded to Wave 1 were called from April 17 to 27, yielding 1,124 completed interviews. Only 34 respondents indicated a desire to be removed from the panel. Many respondents indicated that they had not received a welcome packet, or had changed their mailing address or email address. A total of 332 individuals subsequently completed Wave 1, and another 54 started the Wave 1 interview but did not complete it by the time the survey closed. For operational purposes, this non-respondent follow-up study is considered to be Wave 2 of the panel, though it was not designed to represent the general population and has no substantive questions in it. It is not included in any tables in this report.

Weighting the Panel Data

Once the data collection ends, typically four weeks from the start, the mail and Web data are combined and weighted. The monthly panel weighting protocol uses the original base weights from the recruitment telephone survey, which account for each panelist’s probability of selection. The base weight uses a single frame estimation to adjust for the probability that the respondent’s phone number was selected from the sampling frame, the overlap in the landline and cellphone frames, and the within-household selection in the landline sample. For a subset of the panelists, an additional adjustment is included in the base weight to account for the fact that they belong to a group that was subsampled for invitation to the panel.

The next step in the weighting process is a propensity adjustment for nonresponse to the panel invitation, which in later waves was updated to account for other forms of attrition. Of 9,809 telephone respondents who were invited to join the panel, 5,338 (54.4%) accepted. A propensity score adjustment was computed to correct for differential nonresponse to the panel invitation. A logistic regression model was estimated in which accepting the panel invitation was regressed on sampling frame (landline vs. cell), incentive amount ($5/$10 per survey), internet user, race, marital status, child in the household, age, education, religious service attendance, household income, frequency of voting, opinion of the Tea Party movement (agree with/disagree with), whether or not they contacted an elected official in the last two years, political ideology, and statistically significant 2-way interactions (p<.05). Hispanic ethnicity was excluded from the model because Hispanics were offered a different incentive than most non-Hispanics. Gender and the number of adults in the household were not predictive and were excluded from the model. The set of predictors considered for the model consists of variables that are routinely measured in surveys conducted for Pew Research Center. The estimated propensities were used to divide cases into approximately equal-sized groups using the quintiles of the estimated propensity score. The propensity score adjustment was computed as the inverse of the response rate in each quintile. This quintile approach helps to protect against model misspecification, relative to weighting each case by the inverse of its own estimated response propensity.
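The quintile step described above can be illustrated with a minimal sketch. It assumes the propensities have already been estimated by some model; the function name `quintile_adjustments`, its parameters and the ten-case toy data are hypothetical and are not taken from the panel's actual processing code.

```python
def quintile_adjustments(propensities, accepted, n_groups=5):
    """Return one adjustment factor per case: the inverse of the observed
    acceptance rate within that case's propensity-score group.

    propensities: estimated probability of accepting the invitation
    accepted:     1 if the case accepted the invitation, else 0
    """
    n = len(propensities)
    # Rank cases from lowest to highest estimated propensity.
    order = sorted(range(n), key=lambda i: propensities[i])
    factors = [0.0] * n
    for g in range(n_groups):
        # Integer slice boundaries give groups of approximately equal size.
        members = order[g * n // n_groups:(g + 1) * n // n_groups]
        if not members:
            continue
        rate = sum(accepted[i] for i in members) / len(members)
        if rate == 0:
            raise ValueError("a group has no acceptances; use fewer groups")
        for i in members:
            factors[i] = 1.0 / rate
    return factors


# Hypothetical toy data: ten invited cases with made-up propensities.
toy_propensities = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
toy_accepted = [0, 1, 1, 0, 1, 1, 0, 1, 1, 1]
toy_factors = quintile_adjustments(toy_propensities, toy_accepted)

# Overall acceptance rate reported in the text: 5,338 of 9,809 invited.
overall_rate = 5338 / 9809
```

In this sketch, the adjusted accepting cases in each group add up to the number of invited cases in that group, so the accepters stand in for the full invited sample. Only the factors for accepting cases would be applied to the base weights.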

The next step in the weighting process is post-stratification to target population parameters. The propensity-adjusted base weights for the panelists responding to a particular panel survey are calibrated to population benchmarks using raking, or iterative proportional fitting. This adjustment is designed to reduce the risk of bias stemming from nonresponse at the various stages of the panel design (the RDD survey used for recruitment, the invitation to join the panel and the panel survey). The raking dimensions include age, gender, education, race, ethnicity and Census region from the most recent available American Community Survey. Population density is based on data from the 2010 Decennial Census. Telephone service parameters (landline only, cellphone only and both landline and cellphone) are projections based on the most recent National Health Interview Survey Wireless Substitution Early Release Report. Internet access (user vs. non-user) as measured in the 2014 Political Polarization and Typology Survey (the recruitment survey) is also included as a parameter. This is because most panelists take the surveys online, so there is a concern that internet users could be over-represented in the survey estimates if this dimension is not controlled in the raking. Finally, party affiliation (Republican, Lean Republican, No Lean, Lean Democrat, Democrat) is included, with the parameter set to the average from the three most recent dual-frame RDD monthly surveys conducted for Pew Research Center. The rationale for this was a concern that panelists with a particular political affiliation might respond to panel surveys at a higher rate than other groups; however, this concern proved to be unfounded, as the unweighted distribution of cases on party affiliation typically tracks the average used as a parameter. All raking targets are based on the non-institutionalized U.S. adult (age 18+) population.
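Raking, as described above, can be sketched in a few lines. This is a generic illustration of iterative proportional fitting, not the production procedure: the function name `rake`, the convergence settings, the two toy dimensions and the target shares are all hypothetical.

```python
def rake(cases, base_weights, targets, max_iter=100, tol=1e-8):
    """Iterative proportional fitting on categorical dimensions.

    cases:        list of dicts mapping dimension name -> category
    base_weights: starting weight for each case
    targets:      dimension name -> {category: target population share}
    """
    weights = list(base_weights)
    for _ in range(max_iter):
        max_change = 0.0
        for dim, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this dimension.
            current = {}
            for case, w in zip(cases, weights):
                current[case[dim]] = current.get(case[dim], 0.0) + w
            # Scale every case so this dimension matches its target shares.
            for i, case in enumerate(cases):
                factor = shares[case[dim]] * total / current[case[dim]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        # Stop once a full pass over all dimensions barely changes anything.
        if max_change < tol:
            break
    return weights


def weighted_share(cases, weights, dim, category):
    """Weighted proportion of cases falling in one category."""
    hit = sum(w for c, w in zip(cases, weights) if c[dim] == category)
    return hit / sum(weights)


# Hypothetical toy sample and target shares (not actual benchmarks).
toy_cases = [
    {"gender": "M", "internet": "user"},
    {"gender": "M", "internet": "non-user"},
    {"gender": "F", "internet": "user"},
    {"gender": "F", "internet": "user"},
]
toy_targets = {
    "gender": {"M": 0.5, "F": 0.5},
    "internet": {"user": 0.85, "non-user": 0.15},
}
toy_raked = rake(toy_cases, [1.0] * 4, toy_targets)
```

Each pass matches one margin exactly and slightly disturbs the others, which is why the procedure repeats until the adjustments become negligible. The sketch assumes every target category appears in the sample and that each dimension's target shares sum to 1.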

The final step in the weighting process is trimming. The distribution of the raked weights is trimmed to reduce extreme values. Panel waves are typically trimmed at the 1st and 99th or the 2nd and 98th percentiles.
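A minimal sketch of percentile trimming follows. The text does not specify how the percentiles are computed or whether weights are rescaled afterward, so this example assumes the interpolated "inclusive" percentile definition from Python's `statistics` module and applies no rescaling. The function name `trim_weights` and the toy weights are hypothetical.

```python
import statistics


def trim_weights(weights, lower_pct=1, upper_pct=99):
    """Clamp weights to the given lower and upper percentiles."""
    # quantiles(n=100) returns 99 cut points; index k - 1 is the kth percentile.
    cuts = statistics.quantiles(weights, n=100, method="inclusive")
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    # Values below the lower cut are raised; values above the upper cut are lowered.
    return [min(max(w, low), high) for w in weights]


# Hypothetical raked weights with one very small and one very large value.
toy_weights = [0.1] + [1.0] * 98 + [10.0]
toy_trimmed = trim_weights(toy_weights)            # 1st and 99th percentiles
toy_trimmed_2_98 = trim_weights(toy_weights, 2, 98)  # 2nd and 98th percentiles
```

Trimming pulls the extreme weights toward the rest of the distribution and leaves all other weights unchanged, which limits the influence any single panelist has on the estimates.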