In April 2023, Pew Research Center published an analysis of mission statements from K-12 school districts across the United States. Along with our analysis of this nationally representative sample of districts, we also released the texts of the 1,314 mission statements we collected so that other researchers could use them. Before releasing the raw text, we used automated tools to redact the school districts’ names and other information that might allow them to be identified.

Related: Dataset on School District Mission Statements

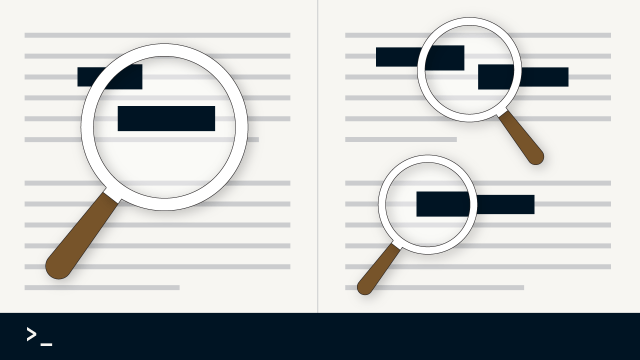

De-identification is a normal step in the publication of research data. But it can be tricky to redact unstructured data that isn’t arranged in any kind of predetermined format.

In structured or tabular data, identifying or sensitive pieces of information are often recorded under variable names such as “Name” or “Address.” That makes de-identification a relatively easy task.
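To make the contrast concrete, here is a minimal Python sketch of de-identifying a small CSV file by dropping whole columns. Treating exactly the “Name” and “Address” columns as identifiers, and the sample row itself, are assumptions for illustration.

```python
import csv
import io

# Columns assumed to hold direct identifiers (hypothetical; each dataset needs its own list).
IDENTIFIER_COLUMNS = {"Name", "Address"}


def drop_identifier_columns(csv_text: str, identifiers: set[str] = IDENTIFIER_COLUMNS) -> str:
    """Return the CSV text with every identifier column removed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = [col for col in reader.fieldnames or [] if col not in identifiers]
    out = io.StringIO()
    # extrasaction="ignore" skips the dropped columns when each row is written.
    writer = csv.DictWriter(out, fieldnames=kept, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return out.getvalue()


sample = "Name,Address,Enrollment\nMarvelous School District,1 Main St,5000\n"
print(drop_identifier_columns(sample), end="")
# Enrollment
# 5000
```

Because the identifiers sit in labeled columns, deciding what to remove comes down to naming those columns; unstructured text offers no such handle.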

In unstructured data, however, there are no labels indicating where those identifiers are. The words we want to remove might appear in several parts of a document or be used inconsistently, which makes them hard to find. K-12 school district mission statements are a good example of unstructured text data that can be lengthy and information-rich.

Consider this example mission statement text for a fictional K-12 district we’ll refer to as the “Marvelous School District”:

At Marvelous School District, our mission is to inspire and empower every student to reach their fullest potential, fostering a lifelong love for learning and a strong sense of community. Guided by innovation, inclusivity, and academic excellence, we are committed to creating an educational environment that nurtures not only academic achievement but also the development of character, critical thinking skills, and a global perspective.

When redacting this text, we want to remove identifying words and phrases like “Marvelous School District,” but avoid removing too much context so as not to diminish the utility of the data. To achieve these goals, we needed an approach that:

- Correctly flagged words and phrases containing identifying information we wanted to remove (maximized true positives)

- Avoided flagging words and phrases that did not contain identifying information and that we wanted to keep (minimized false positives)
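One way to check an approach against these two goals is to compare the phrases it flags with a hand-labeled set of phrases known to be identifying. The sketch below assumes such a labeled set exists; it illustrates the two terms and is not a description of how the methods in this post were evaluated.

```python
def score_flags(flagged: set[str], identifying: set[str]) -> dict[str, int]:
    """Compare flagged phrases with a hand-labeled set of identifying phrases."""
    return {
        # Identifying phrases the method caught (we want this high).
        "true_positives": len(flagged & identifying),
        # Harmless phrases the method flagged anyway (we want this low).
        "false_positives": len(flagged - identifying),
        # Identifying phrases the method missed.
        "false_negatives": len(identifying - flagged),
    }


flagged = {"Marvelous School District", "community"}
identifying = {"Marvelous School District"}
print(score_flags(flagged, identifying))
# {'true_positives': 1, 'false_positives': 1, 'false_negatives': 0}
```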

In the end, we used three methods to identify and remove the names of the school districts in our sample:

- Exact name matching based on a list of known school district names

- Named Entity Recognition (NER) to identify text sequences that are likely to be names of organizations without researchers needing to provide specific patterns to look for in the text

- Text processing with regular expressions to detect character patterns in the text that often indicate school district names

We also used regular expressions to redact identifying words and phrases other than school district names, such as addresses or district numbers.

In this post, we’ll focus solely on the de-identification of school district names to illustrate the strengths and weaknesses of each method mentioned above. When one method failed to detect the school district name in a statement, another was able to identify it. Combining these methods ensured the removal of the school district names from all mission statements.

School district name matching

One way to identify school district names in the text was to match them against outside data sources. In this case, we relied on the same two sources we used to build our list of school districts in the first place: the Common Core of Data from the National Center for Education Statistics (NCES) and the American Community Survey (ACS) from the U.S. Census Bureau. Both datasets have detailed information about school districts, including their official names.

However, the mission statements we found sometimes used names that differed from those listed in the NCES or ACS datasets. For example, instead of saying “Marvelous School District,” a mission statement might say “MSD.” This led us to look for tools that could identify school district names without our having to know, and exactly match, the names as officially listed.
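A minimal sketch of exact name matching in Python is shown below. It assumes the official names have already been loaded from the NCES and ACS files into a plain list (loading is not shown) and ignores case. The second call shows the limitation just described: an abbreviation such as “MSD” is not caught.

```python
import re


def find_exact_names(text: str, known_names: list[str]) -> list[str]:
    """Return the known district names that appear in the text as whole words, ignoring case."""
    found = []
    for name in known_names:
        # re.escape treats the name literally; \b keeps it from matching inside a longer word
        # (this assumes names begin and end with a letter or digit).
        pattern = r"\b" + re.escape(name) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(name)
    return found


known = ["Marvelous School District"]  # stand-in for names taken from the NCES/ACS data
print(find_exact_names("At Marvelous School District, our mission is ...", known))
# ['Marvelous School District']
print(find_exact_names("At MSD, our mission is ...", known))
# []
```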

Named Entity Recognition

Named Entity Recognition (NER) is an approach that identifies words or phrases in text that represent named entities such as people (e.g., “John Doe”), organizations (e.g., “Pew Research Center”) or locations (e.g., “Washington, D.C.”). NER systems often use large language models trained to understand human language and detect named entities. While the diversity and size of the text used to train these models allow them to capture general patterns in language, the models may not always handle domain-specific content well. Training a model further on domain-specific data can improve its accuracy, but that takes time and effort.

For this project, we used the Hugging Face Transformers library, which includes a variety of pretrained models for NER tasks. The pretrained models available in this library performed well, but not perfectly, for our purposes. In a handful of cases, the models failed to detect the school district as an entity in the text. In others, they tagged words like “mission” or “community” as locations or organizations, even when neither word was part of the school district name.
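The sketch below shows the general shape of this step with the Transformers `pipeline` API; it is not the project’s code. The model is whatever default the library picks for the `"ner"` task (in practice you would pin one with the `model` argument), and keeping only `ORG` entities is an assumption based on the focus on organization names. The filtering helper works on the list of dictionaries the pipeline returns when `aggregation_strategy="simple"` is set, so it can be tried on hand-written entities without downloading a model.

```python
def run_ner(text: str) -> list[dict]:
    """Tag named entities with a pretrained Hugging Face model (needs `transformers` and a model download)."""
    from transformers import pipeline  # imported here so the helper below runs without it

    # "simple" aggregation merges word pieces into whole entities with
    # entity_group, score, word, start and end keys.
    ner = pipeline("ner", aggregation_strategy="simple")
    return ner(text)


def organization_spans(text: str, entities: list[dict], labels: tuple[str, ...] = ("ORG",)) -> list[str]:
    """Return the original text of every entity whose group is in `labels`."""
    # Slicing by character offsets avoids tokenizer artifacts that can appear in the "word" field.
    return [text[ent["start"]:ent["end"]] for ent in entities if ent["entity_group"] in labels]


# Hand-written entities in the pipeline's output format (scores are made up),
# including a spurious "community" tag.
text = "At Marvelous School District, our mission is to build community."
start = text.index("community")
entities = [
    {"entity_group": "ORG", "score": 0.98, "word": "Marvelous School District", "start": 3, "end": 28},
    {"entity_group": "LOC", "score": 0.61, "word": "community", "start": start, "end": start + 9},
]
print(organization_spans(text, entities))
# ['Marvelous School District']
```

With `transformers` installed, `organization_spans(text, run_ner(text))` runs the real model on a statement. Note that a spurious tag carrying the `ORG` label would pass this filter, which is one reason a later cleanup step is needed.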

One way to address this challenge would have been to fine-tune a model – that is, take a pretrained model and train it further on a smaller, domain-specific dataset. This would have let us keep the knowledge of the pretrained model while adapting it to the nuances of school mission statements. But it would also have required experimenting until the model’s performance improved sufficiently. Instead of spending our effort on fine-tuning, we explored one more method that we hoped could identify the school district names the NER model missed.

Regular expressions

As a third step, we used regular expressions to identify patterns in school district names. Like the district name matching step described above, regular expressions can find exact matches. However, they can also handle queries for more complex patterns, such as searching for words that come before a known pattern, literal character matching (like the search function in a text editor), matching whole classes of characters (for instance, “find all the numbers” or “find all the words that start with an uppercase letter”), or any combination of these.
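Python’s built-in `re` module supports each kind of query listed above. The sample sentence is invented for illustration.

```python
import re

text = "District 42 serves 5,000 students. Our Mission: Every Student Succeeds."

# Literal character matching, like a text editor's search box.
print(re.findall(r"Mission", text))             # ['Mission']
# A whole class of characters: every run of digits (the comma splits 5,000).
print(re.findall(r"\d+", text))                 # ['42', '5', '000']
# Every word that starts with an uppercase letter.
print(re.findall(r"\b[A-Z]\w*", text))          # ['District', 'Our', 'Mission', 'Every', 'Student', 'Succeeds']
# The word right before a known pattern, using a lookahead that is not part of the match.
print(re.findall(r"\b\w+(?= Succeeds)", text))  # ['Student']
```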

In our case, we used regular expressions to identify all capitalized words that came before either the word “school” or “district” in our mission statements. However, those words are not always part of the name of the district. Consider this example:

MISSION Marvelous Schools’ students, faculty, and staff believe that education is a partnership among schools, families, and communities.

Here, our query would return a false positive – “MISSION Marvelous” instead of “Marvelous.” Luckily, these false positives were easy to spot and address by removing words like “community,” “mission,” “vision,” “statement” or “goal(s)” from our query results. We also removed common words with little semantic significance, such as “of” and “the,” which are known as “stop words.”
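A minimal sketch of this query and filter in Python follows. The exact pattern used for the project isn’t published, so the regular expression, the short stop-word list and the decision to also drop the anchor words “school” and “district” themselves are assumptions for illustration; a real stop-word list would be much longer.

```python
import re

# One or more capitalized words (e.g. "MISSION Marvelous") directly followed by
# "school(s)" or "district(s)"; the scoped (?i:...) flag makes only the anchor case-insensitive.
CANDIDATE_RUN = re.compile(r"(?:\b[A-Z][\w'’-]*\s+)+(?=(?i:schools?|districts?)\b)")

# Words dropped from the matches because they caused false positives.
EXCLUDED_WORDS = {"community", "mission", "vision", "statement", "goal", "goals"}
# Hypothetical, deliberately short stop-word list.
STOP_WORDS = {"a", "an", "at", "of", "the"}
# The anchor words themselves are not treated as part of a distinctive name (an assumption).
ANCHOR_WORDS = {"school", "schools", "district", "districts"}


def candidate_name_words(text: str) -> list[str]:
    """Return capitalized words found before 'school'/'district', minus excluded words."""
    words: list[str] = []
    for match in CANDIDATE_RUN.finditer(text):
        for word in match.group().split():
            key = word.lower()
            if key in EXCLUDED_WORDS or key in STOP_WORDS or key in ANCHOR_WORDS:
                continue
            if word not in words:  # keep only the first occurrence
                words.append(word)
    return words


statement = ("MISSION Marvelous Schools’ students, faculty, and staff believe that "
             "education is a partnership among schools, families, and communities.")
print(candidate_name_words(statement))
# ['Marvelous']
```

Without the exclusion list, the same query would also return “MISSION”, which is exactly the false positive described above.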

Conclusions

Researchers often need to redact identifiable information from text before releasing their datasets publicly. For example, interviews and transcripts may contain personal stories, anecdotes or other identifiable information, and redacting such information helps ensure anonymity.

So why use computational methods instead of doing this manually?

Removing sensitive information by hand can be not only slow but also error-prone, especially when documents are long or include complex formatting. With the appropriate tools and techniques, computational methods are more efficient and reliable. Depending on how sensitive the documents are, however, researchers can still follow automated redaction with a manual review, which helps ensure accuracy while saving time.

In our case, each method we explored performed well to some extent, but none was perfect. So we decided to combine all three approaches. For each school district mission statement, we followed these steps:

- First, we identified exact matches to the district name as listed in the NCES and ACS datasets.

- Then, we used NER to identify words and phrases referring to organization names.

- Next, we used regular expressions to identify capitalized words that preceded the word “school” or “district.”

- Because NER and regular expressions returned false positives in some cases, we removed certain words from the list of identified words. These included “community,” “mission,” “vision,” “statement” and “goal.”

- We redacted the remaining list of words from the statements.
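The last two steps can be sketched as follows. The function takes the candidates each method produced as plain lists (how they were produced is not repeated here), strips the excluded words listed above, plus the stop words “of” and “the,” from the start and end of each candidate, and replaces what remains. The `[REDACTED]` placeholder, the edge-only stripping and the longest-first replacement order are illustrative choices, not a description of the project’s code.

```python
import re

# Words dropped from candidates because NER and regular expressions flagged them wrongly.
DROP_WORDS = {"community", "mission", "vision", "statement", "goal", "goals", "of", "the"}


def clean_candidate(phrase: str) -> str:
    """Strip excluded words from both ends of a candidate phrase."""
    words = phrase.split()
    while words and words[0].lower() in DROP_WORDS:
        words.pop(0)
    while words and words[-1].lower() in DROP_WORDS:
        words.pop()
    return " ".join(words)


def redact(text: str, *candidate_lists: list[str], placeholder: str = "[REDACTED]") -> str:
    """Replace every cleaned candidate from every method with `placeholder`."""
    candidates = {clean_candidate(p) for cands in candidate_lists for p in cands}
    candidates.discard("")  # candidates made up only of excluded words
    # Longest first, so a full name is replaced before any shorter name inside it.
    for phrase in sorted(candidates, key=len, reverse=True):
        text = re.sub(r"\b" + re.escape(phrase) + r"\b", placeholder, text, flags=re.IGNORECASE)
    return text


statement = "MISSION Marvelous Schools’ students believe in community. At Marvelous School District, we learn."
exact = ["Marvelous School District"]
ner = ["Marvelous School District", "community"]
regex = ["MISSION Marvelous"]
print(redact(statement, exact, ner, regex))
# MISSION [REDACTED] Schools’ students believe in community. At [REDACTED], we learn.
```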

After doing a final manual review to ensure all mission statements were properly redacted, we released our dataset publicly.