Survey researchers often ask respondents about things they may have done in the past: Have they attended a religious service in the past year? When was the last time they used cash to pay for something? Did they vote in the most recent presidential election? But questions like these can put respondents’ memories to the test.

In many instances, we have no option but to ask these questions directly and rely on strategies to help with respondents’ recollection. But increasingly, we’re able to find out exactly what someone did instead of asking them to remember it. In the case of social media, for example, we can ask respondents how often they post on a site like Twitter. But we can also simply look up the answer if they have given us permission to do so.

Since space in a survey questionnaire is a precious resource, we generally don’t like to ask respondents about things we can easily find ourselves. But there are times when it’s useful to compare what respondents say in a survey with what we can discover by looking at their online activity. Having both pieces of information – what people say they did and what they actually did – can help us better understand things like respondent recall or how respondents and researchers think about and define the same activities.

This post explores the connection between Americans’ survey responses and their digital activity using data from our past research about Twitter. First, we’ll look at how well U.S. adults on Twitter can remember the number of accounts they follow (or that follow them) on the platform. Then we’ll compare survey responses and behavioral measures related to whether respondents post about politics on Twitter. This, in turn, can help us learn more about how respondents and researchers interpret and operationalize the concept of “political content.”

All findings in this analysis are based on a May 2021 survey of U.S. adults who use Twitter and agreed to share their Twitter handle with us for research purposes.

Followings and followers

In our May 2021 survey, we asked Twitter users to tell us how many accounts they follow on the site, as well as how many accounts follow them. We did not expect that they would know these figures off the top of their heads, and worried that asking for a precise number could prompt respondents to go to their Twitter accounts and look up the answers. So, we gave them response options in the form of ranges: fewer than 20 accounts, from 20 to 99, from 100 to 499, from 500 to 999, from 1,000 to 4,999 and more than 5,000 accounts. (We accidentally excluded the number 5,000 from the ranges listed in our survey instrument. Luckily for us, none of our respondents had exactly 5,000 followers, so this oversight did not come into play in any of the calculations that follow.)

After asking these questions, we used the Twitter API to look up each respondent’s profile on the site. We then checked whether the range each respondent chose in the survey contained the actual count we found on their profile, both for the accounts they follow and for the accounts that follow them.
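The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the analysis: the function names, the label strings and the toy respondents are hypothetical, the calculation ignores survey weights, and a count of exactly 5,000 (which the survey ranges left out) is arbitrarily placed in the top range.

```python
import bisect

# Survey response options, in ascending order (labels are illustrative).
RANGE_LABELS = [
    "fewer than 20",
    "20 to 99",
    "100 to 499",
    "500 to 999",
    "1,000 to 4,999",
    "more than 5,000",
]

# Smallest count that belongs to each range after the first one.
# Assumption: exactly 5,000 is assigned to the top range.
LOWER_BOUNDS = [20, 100, 500, 1000, 5000]


def range_for_count(count):
    """Return the survey range that contains an observed account count."""
    if count < 0:
        raise ValueError("count must be non-negative")
    return RANGE_LABELS[bisect.bisect_right(LOWER_BOUNDS, count)]


def share_accurate(respondents):
    """Unweighted share of respondents whose chosen range matches their profile.

    `respondents` is a list of (chosen_range_label, observed_count) pairs.
    """
    matches = sum(
        1 for chosen, observed in respondents
        if chosen == range_for_count(observed)
    )
    return matches / len(respondents)


# Made-up respondents: (range chosen in the survey, count seen on the profile).
toy_respondents = [
    ("20 to 99", 57),         # accurate
    ("100 to 499", 612),      # inaccurate: 612 falls in "500 to 999"
    ("fewer than 20", 3),     # accurate
    ("1,000 to 4,999", 1000), # accurate
]

print(share_accurate(toy_respondents))
```

The same function works for either question; only the observed count changes (accounts followed versus followers).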

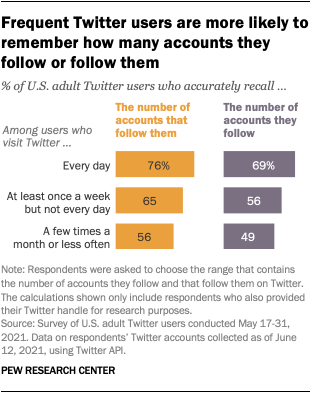

More than half of Twitter users who provided their handle (59%) chose a range that accurately reflected the number of accounts they follow. And two-thirds (67%) chose the correct range for the number of accounts that follow them. So it seems that Twitter users have slightly more awareness of their own reach on the platform than of the number of accounts they’ve chosen to follow.

We also found that the more often users say they use Twitter, the better they were at answering this question. For instance, 69% of those who said they use Twitter every day were able to accurately report the range of accounts they follow. But that share fell to 49% among those who said they use it a few times a month or less often.

Frequency of political tweeting

Of course, asking people how many accounts they follow on Twitter is a concrete question with a definitive answer. Many other questions are more abstract.

Consider our 2022 study about politics on Twitter, in which we wanted to measure how often Twitter users talk about politics on the site based on the content of their tweets. Most people can probably agree that “talking about politics” includes someone expressing explicit support for a candidate for office or commenting on a new bill being debated in Congress. But what about someone expressing an opinion about a social movement or sharing an opinion piece about tax rates or inflation? Defining which tweets to count as “political” is not easy.

In earlier research on political tweeting, we used a fairly narrow definition: “political tweets” were those mentioning common political actors or a formal political behavior like voting. This definition makes it easy to identify relevant tweets, but it also leaves out many valuable forms of political speech. In our more recent study, we wanted to use a broader definition that was more in line with what people understand as political content. For this, we fine-tuned a machine learning model that was pretrained on a large corpus of English text data, and we validated it using 1,082 tweets hand-labeled by three annotators who were given a general prompt to identify any that contained “political content.” (The complete details can be found in the methodology of the 2022 report.)

With that automatic classification of all our respondents’ tweets in hand, we wanted to know whether we were capturing tweets that they themselves would classify as political content. So we included a question in our survey asking respondents if they had tweeted or retweeted about a political or social issue in the last year; 45% said that they had done this. We then compared their responses to our classification of their tweets.

As it turns out, a large majority (78%) of those who said that they had tweeted about politics in the last year had posted at least one tweet classified as political content by our machine learning model. And 67% of these users had posted at least five such tweets in that time. This tells us that the definition operationalized in our machine learning model was catching a large percentage of the respondents who said that they engaged in the behavior we were studying.

However, this is only part of the story. When we looked at people who said they didn’t tweet or retweet about politics in the last year, we found that 45% of them did, in fact, post at least one tweet in the last year that our model classified as political – and 24% of them had posted five or more such tweets during that span. These results were similar if we changed the time horizon, using a separate question asking if users had ever tweeted or retweeted about political or social issues or if they had done so in the last 30 days.
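The comparison in the last few paragraphs amounts to a simple cross-tabulation: split respondents by their survey answer, then compute the share in each group with at least some number of tweets the model classified as political. Here is a minimal sketch in Python. The field names, the function and the toy data are all hypothetical, and the calculation is unweighted, so it shows the logic rather than reproducing the figures above.

```python
def share_with_political_tweets(respondents, said_yes, min_tweets=1):
    """Share of one survey group with at least `min_tweets` political tweets.

    `respondents` is a list of dicts with two hypothetical fields:
      - "said_tweeted_politics": the respondent's survey answer (True/False)
      - "n_political_tweets": tweets in the past year classified as political
    Returns None if no respondent gave the requested answer.
    """
    group = [r for r in respondents if r["said_tweeted_politics"] == said_yes]
    if not group:
        return None
    hits = sum(1 for r in group if r["n_political_tweets"] >= min_tweets)
    return hits / len(group)


# Made-up respondents for illustration only.
toy_respondents = [
    {"said_tweeted_politics": True, "n_political_tweets": 0},
    {"said_tweeted_politics": True, "n_political_tweets": 1},
    {"said_tweeted_politics": True, "n_political_tweets": 7},
    {"said_tweeted_politics": True, "n_political_tweets": 12},
    {"said_tweeted_politics": False, "n_political_tweets": 0},
    {"said_tweeted_politics": False, "n_political_tweets": 0},
    {"said_tweeted_politics": False, "n_political_tweets": 2},
    {"said_tweeted_politics": False, "n_political_tweets": 6},
]

# Compare both survey groups at both thresholds used in the text.
for said_yes in (True, False):
    for threshold in (1, 5):
        share = share_with_political_tweets(toy_respondents, said_yes, threshold)
        print(said_yes, threshold, share)
```

Reading the "said no" rows is the useful part: any nonzero share there points to either a broader definition in the model than in respondents’ minds or a gap in recall.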

In other words, either our definition of political content was more encompassing than the one used by some of our survey respondents, or some respondents simply didn’t recall that they had posted political content.

Conclusions

This exercise allows us to see how well U.S. Twitter users’ survey responses match up with their observed behaviors on the site. Sometimes the two measures align fairly well, as in the case of follower counts. In other situations, however, making a comparison is harder. Some questions, such as whether tweets are political or not, depend on the judgment of the respondents, and our operational definition may be different from theirs. That discrepancy is not always avoidable. Using a single definition to measure Twitter behavior among a large group of respondents means that some respondents may not recognize certain behaviors the same way we do.