Social scientists are increasingly adopting machine learning methods to analyze large amounts of text, images and other kinds of data. These methods, which include different types of deep learning models, allow researchers to measure important concepts and model human behavior and information flows in innovative ways. However, a lack of established best practices in this fast-changing field can make these methods difficult to implement. This post dives into Pew Research Center’s recent experience adopting a deep learning model for a project that studied gender representation in Google Images. It also describes how we used a technique called “transfer learning” to make our classification project much more manageable.

Background

The gender representation report research team was comprised of researchers with social science backgrounds, including economist Onyi Lam and political scientists Adam Hughes and Stefan Wojcik. Before this project, we’d used machine learning to conduct text analysis research, often relying on the Python library scikit-learn. We had almost no prior experience with deep learning. But we were interested in expanding our research to examine images since images represent a large component of online information.

We decided to explore how online image searches represent men and women in the workplace. To answer this question, we obtained a large quantity of images from Google search results for various jobs and then classified individuals who appeared in those images as male or female.

This particular project was ideally suited to machine learning because of its scope. What requires hours of manual human labor can be automated and completed in a few minutes with machine learning. And since machine learning models can scale to very large datasets, we were able to significantly expand our project’s scope. In addition to the more than 10,000 images we collected from English language Google searches in the U.S., we included over 40,000 images from searches using 17 other country-language combinations as well.

How transfer learning works

A deep learning model is composed of layers that represent different information about an object. Generally speaking, deeper neural networks with more layers are able to tackle more complicated tasks. However, this increased performance comes at a cost, since deeper neural networks need more data and time to learn a task. As a consequence, researchers training a deep learning model often need to assemble a very large set of training data and specialized computing devices to reduce time demands.

The team collected an initial dataset for training that contained over 10,000 faces and included human-coded labels for gender, race, and age, among other attributes. While that number may sound like a lot, a common dataset used for identifying objects in images called ImageNet contains millions of images.

With the limited amount of data available, the team worried that training a neural network from scratch was an insurmountable task. After doing more research on neural networks, we decided to adopt an approach called transfer learning, inspired by tutorials provided by fast.ai, a website dedicated to machine learning education.

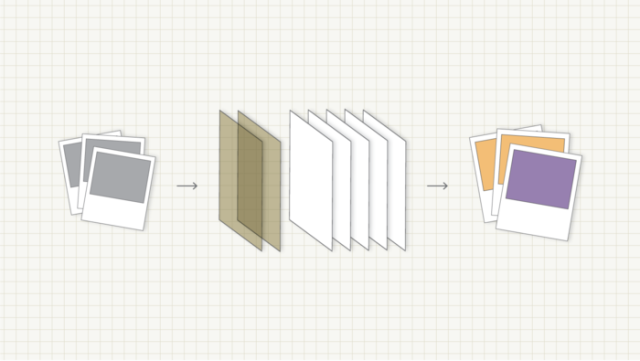

The core idea underlying transfer learning is that researchers can “recycle” the knowledge gained from a large, pre-trained neural network when applying it for a new task. While neural networks are often composed of many different “layers,” the lower layers of the network often contain low-level abstract information about objects in images such as lines and curves, which is useful across many image classification tasks.

Transfer learning essentially cuts the lower layers out of a pre-trained neural network and pastes them onto new, untrained layers. From there, researchers can train the neural network on a new task, — even if the new task is different from the task the pre-trained neural network was taught to do. In our case, we trained the system to recognize gender patterns, but the pretrained model was originally trained to detect over 1000 objects like banjos, birds and balloons. Many organizations provide access to pre-trained neural networks that identify objects in photos free of charge, so gaining access to an array of pre-trained neural networks was not a barrier.

Transfer learning still requires some training data, such as the particular classification decisions researchers want to estimate, but it supplements that data by relying on the collective wisdom of “pre-trained” models. The main insight is that by attaching additional layers to the backbone of a pre-trained model, the model does not need to learn low-level features and can jump right into learning our specific classification task. This makes kick-starting a new project or testing an idea much faster than if one were to train a model entirely from scratch.

In our initial attempt, we used the pre-trained ResNet-50 model from the Python library `Keras` and achieved 90% accuracy. The result was a pleasant surprise and we quickly realized the promise of this approach: it would allow us to answer our original research question with acceptable levels of predictive error. Similar deep learning libraries include Pytorch, Fastai and Tensorflow, which are often available in both Python and R.

The basic architecture of the transfer learning implementation, using `Keras`, appears below, where the `base_model` is the Resnet-50 pretrained model. In Keras, some of the available pre-trained models include ResNet, VGG and Inception, among others.

# get output from the pre-trained model

x = base_model.output

# stack additional layers on top for fine-tuning

x = GlobalAveragePooling2D()(x)

x = Dense(512, activation=’relu’)(x)

predictions = Dense(1, activation=’sigmoid’)(x)

model = Model(inputs=base_model.input, outputs=predictions)There are still a lot of parameters in the models that need to be optimized, and different choices will yield better results depending on the application. So even with transfer learning, the best modeling choices are not immediately clear, and researchers should test different model parameters.

However, the basic idea of using pretrained models as a backbone can allow researchers to quickly decide whether the approach is worth the time. If your transfer learning model does not yield a reasonable level of performance, it might be time to think about re-collecting training data or reexamining the research design altogether.

For social scientists who are interested in more than just training a model — those who want to answer a specific research question — transfer learning offers a big advantage over training a model from scratch. Transfer learning makes it possible to get a sense of whether an idea is feasible in a matter of hours, rather than the days or weeks it might take to train a model from scratch. And since social scientists are often more interested in making aggregate estimates of populations rather than making production-ready image classifiers, they are unlikely to require true state-of-the-art performance.

Evaluating and fine tuning the model

To evaluate the model performance, we employed both cross-validation and comparisons of model predictions with human decisions. Cross-validation involves holding back part of the labeled data from training, and human validation involves having humans label a small set of data that the model made predictions on. (We used the Mechanical Turk crowdsourcing service to help us label thousands of faces, and three mTurkers examined each image.) We then tested the model on those labeled data and compared the model predictions with the true values.

Social scientists are often interested in making subgroup comparisons across different socio-demographic lines. One of the key criteria we imposed on the model performance was to make sure that the accuracy of the prediction had to be broadly consistent across demographic groups. Since we wanted to deploy the model to analyze a diverse set of faces, we did not want the accuracy of the result to be biased against a certain group and lead to incorrect inferences when we made cross-group comparisons.

In the first few versions of the model we noticed that its performance was noticeably worse on women’s faces when compared to men. Upon reviewing some incorrectly classified images, we noticed that the model did worse with images of darker-skin females (this issue is similar to one raised by researcher MIT Joy Buolamwini and her co-authors, who showed that off-the-shelf, commercial face recognition suffers from lower accuracy on darker- skin females), as well as those of individuals wearing glasses, which suggested over-fitting of models. Over-fitting is a common problem in machine learning research and is defined as when a model learns quirks from the data instead of broader patterns associated with the task.

To address this bias, we collected an additional set of images that included specific minority groups and also ensured that certain non-gender-related facial features, such as glasses, should not be a predictive feature of the model. To do that, we queried a list of minority individuals from Google Image Search and saved the returned images as separate training datasets. Another strategy we employed to increase the diversity of our training images was to query Google Image with specific search terms that had the following format: “country” + “men/women” such as “Argentinian men” and “Iraqi women” for 100 of countries. We downloaded up to 100 images for each query and collected more than 20,000.

The final version of the model was able to achieve 95% cross-validation accuracy and 88% human-validation accuracy. Upon reviewing the misclassified images in the human-validation set, we noticed that many of them were of noticeably lower quality — the faces were often blurred or obstructed. Since our training dataset did not contain any children’s faces, the model also did worse when it comes to making predictions about children.

Parting thoughts

Deep learning is an exciting and fast-changing field with new methods emerging regularly. It might seem like a daunting task for social scientists to adopt these new tools. However, using transfer learning can lower the costs associated with some of these methods, and social scientists can use them effectively. And the same fundamental approach described in this post can be applied to different types of media, including text and audio data.

The process of building a neural network for this project highlighted some of the challenges for computational social scientists applying deep learning to research. We needed to understand how the model treated people from different demographic backgrounds in order to avoid bias in our estimates. We also needed to establish ways to test for systematic bias in the model. Every social scientist will confront their own challenges in applying these kinds of models, but deep learning tools will be useful for many researchers interested in image, audio and text data.