The trend analysis in this report relies on data from multiple Pew Research Center surveys that were combined by year to minimize sampling error. The combined surveys were national surveys of U.S. adults conducted by live telephone interviewing with random-digit-dial (RDD) sampling. Surveys conducted before 2007 used landline RDD samples; surveys conducted in 2007 and later generally used both landline and cellphone RDD samples.
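Pooling several surveys fielded in the same year increases the number of interviews behind each yearly estimate, and sampling error shrinks roughly with the square root of that number. The sketch below illustrates the idea under stated assumptions. The record layout `(survey_id, year, weight, outcome)` and every function name are hypothetical. It also assumes that weights are first normalized to average 1.0 within each survey so that each survey contributes in proportion to its interviews. This is one reasonable pooling convention, not necessarily the one used for this report.

```python
from collections import defaultdict
from math import sqrt

# Each record is (survey_id, year, weight, outcome), where outcome is 1 or 0.
# The record layout and the example values are hypothetical.


def normalize_within_survey(records):
    """Rescale weights to average 1.0 within each survey, so each survey
    contributes to its year in proportion to its number of interviews."""
    weight_sum = defaultdict(float)
    count = defaultdict(int)
    for survey, _year, w, _y in records:
        weight_sum[survey] += w
        count[survey] += 1
    return [(s, yr, w * count[s] / weight_sum[s], y) for s, yr, w, y in records]


def pool_by_year(records):
    """Return {year: (weighted share with outcome == 1, pooled interviews)}."""
    acc = defaultdict(lambda: [0.0, 0.0, 0])  # [weighted yes, weight total, n]
    for _s, year, w, y in normalize_within_survey(records):
        acc[year][0] += w * y
        acc[year][1] += w
        acc[year][2] += 1
    return {year: (yes / total, n) for year, (yes, total, n) in acc.items()}


def srs_standard_error(p, n):
    """Simple-random-sampling standard error; it shrinks as 1/sqrt(n)."""
    return sqrt(p * (1 - p) / n)


records = [("A", 2004, 1.0, 1), ("A", 2004, 1.0, 0),
           ("B", 2004, 2.0, 1), ("B", 2004, 2.0, 1)]
share, n = pool_by_year(records)[2004]
print(share, n, srs_standard_error(share, n))
```

Doubling the pooled sample size cuts the simple-random-sampling standard error by a factor of about 1.41 (the square root of 2). Real survey estimates also carry a design effect from weighting.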

Other analysis in this report is based on a national landline and cellphone survey of U.S. adults living in all 50 U.S. states and the District of Columbia, conducted Aug. 23 to Sept. 2, 2016. That study was conducted by Abt SRBI and included 2,802 total interviews (702 on landlines and 2,100 on cellphones). Both samples were provided by Survey Sampling International. Respondents in the landline sample were selected by randomly asking for the youngest adult male or female who is now at home. Interviews in the cell sample were conducted with the person who answered the phone, if that person was an adult 18 years of age or older. All cellphone numbers were manually dialed. The voter file analysis (presented in the last section) was based on the cellphone sample from this study.

The benchmarking analysis used a subset of this study that followed Pew Research Center's standard protocol for national dual-frame RDD surveys: a seven-call design for landlines and cellphones, English and Spanish administration, an interview of approximately 20 minutes and no advance mailing. This component included 301 landline interviews and 900 cellphone interviews and was weighted according to the Center's standard protocol. The combined landline and cellphone samples are weighted using an iterative technique that matches gender, age, education, race, Hispanic origin and nativity, and region to parameters from the Census Bureau's 2014 American Community Survey, and population density to parameters from the Decennial Census. The sample is also weighted to match current patterns of telephone status (landline only, cellphone only, or both landline and cellphone), based on extrapolations from the 2015 National Health Interview Survey. The weighting procedure also accounts for the fact that respondents with both a landline and a cellphone have a greater probability of being included in the combined sample, and it adjusts for household size among respondents with a landline phone. The margins of error reported and statistical tests of significance are adjusted to account for the survey's design effect, a measure of how much efficiency is lost from the weighting procedures. For detailed information about our survey methodology, see https://www.pewresearch.org/methodology/u-s-survey-research/. For information on the 2012 study's methodology, see https://www.pewresearch.org/politics/2012/05/15/assessing-the-representativeness-of-public-opinion-surveys/
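The "iterative technique" described above is commonly known as raking, or iterative proportional fitting. Weights are repeatedly scaled so that one variable's weighted distribution matches its population target, then the next variable's, until all margins agree. The following is a minimal sketch, not the Center's production weighting code. The function name, the two example variables and their target shares are hypothetical. The optional `base_weights` argument stands in for design weights, such as adjustments for phone-frame overlap and household size, which are not modeled here.

```python
from collections import defaultdict


def rake(respondents, targets, base_weights=None, max_iter=100, tol=1e-8):
    """Iterative proportional fitting (raking).

    respondents:  list of dicts, variable name -> category
    targets:      dict, variable name -> {category: population share}
    base_weights: optional starting weights (e.g. design weights)
    Returns weights rescaled to sum to the number of respondents.
    """
    if base_weights is not None:
        w = list(base_weights)
    else:
        w = [1.0] * len(respondents)
    for _ in range(max_iter):
        largest_change = 0.0
        for var, shares in targets.items():
            total = sum(w)
            # Current weighted total in each category of this variable.
            current = defaultdict(float)
            for wi, r in zip(w, respondents):
                current[r[var]] += wi
            # Scale every respondent so this variable's margin hits its target.
            for i, r in enumerate(respondents):
                factor = shares[r[var]] * total / current[r[var]]
                largest_change = max(largest_change, abs(factor - 1.0))
                w[i] *= factor
        if largest_change < tol:
            break
    scale = len(respondents) / sum(w)
    return [wi * scale for wi in w]


# Hypothetical respondents and population targets.
people = [{"sex": "M", "age": "18-49"}, {"sex": "M", "age": "50+"},
          {"sex": "F", "age": "18-49"}, {"sex": "F", "age": "50+"},
          {"sex": "F", "age": "50+"}]
targets = {"sex": {"M": 0.5, "F": 0.5}, "age": {"18-49": 0.5, "50+": 0.5}}
weights = rake(people, targets)
```

Raking only matches each variable's marginal distribution, not the joint distribution of variables. This is why it can use many targets from different sources, such as the ACS and the NHIS, at once.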

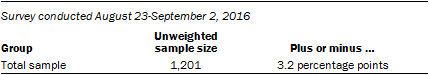

The following table shows the unweighted sample sizes and the error attributable to sampling that would be expected at the 95% level of confidence for different groups in the survey:

Sample sizes and sampling errors for other subgroups are available upon request.
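The margins of error above account for the design effect from weighting. One common way to approximate that effect from the weights alone is Kish's formula, n·Σw² / (Σw)². The sketch below assumes Kish's approximation and a proportion of 50%, which gives the largest margin. The Center's own design-effect calculation may differ, and the design effect of 1.3 in the example is an assumed value, not one taken from this survey.

```python
from math import sqrt


def kish_design_effect(weights):
    """Kish's approximation of the design effect due to unequal weights:
    n * sum(w^2) / (sum(w))^2, equivalently 1 + CV^2 of the weights."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2


def margin_of_error(n, deff=1.0, p=0.5, z=1.96):
    """Margin of error for a proportion at 95% confidence (z = 1.96),
    inflated by the square root of the design effect."""
    return z * sqrt(deff * p * (1 - p) / n)


# 1,201 interviews (301 landline + 900 cellphone); deff = 1.3 is assumed.
print(f"{100 * margin_of_error(1201):.1f} points without weighting")
print(f"{100 * margin_of_error(1201, deff=1.3):.1f} points with deff = 1.3")
```

Equal weights give a design effect of exactly 1. The more the weights vary, the larger the effect and the wider the margin of error.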

In addition to sampling error, one should bear in mind that question wording and practical difficulties in conducting surveys can introduce error or bias into the findings of opinion polls.

Details about the computation of a benchmark for voter registration

There is no rigorous and widely accepted measure of the share of Americans who are registered to vote. Voter registration, like voting itself, is believed to be overreported in surveys. Official voter registration records are known to greatly overstate the number of active registrants because election officials fail to eliminate duplicate records and keep registration lists current. Commercial voter files attempt to capture registration while using techniques to minimize duplication and deadwood (records of people who have moved or died). However, because most of these lists begin with registration records, they are also known to miss many Americans who are not registered to vote.

The most widely used official measure is derived from the voter supplement to the Current Population Survey (CPS), which is conducted every two years after national elections. That survey interviews a large sample of people who have been empaneled and asks those who are eligible to vote whether they did so in the recent election. Those who report having voted are also coded as being registered. Those who report not voting are asked whether they are registered. However, the CPS measure of turnout and registration has several problems. First, individuals who do not respond to the supplement are treated as having not voted. Although this cancels out much of the bias due to overreporting of voting, it introduces error into year-to-year trends by confounding changes in nonresponse with changes in actual voter turnout.¹² Second, when nonrespondents are excluded, overreporting means that estimated turnout greatly exceeds the number of votes that were actually cast in the election. It follows that estimated registration rates are likely too high as well.
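The two CPS treatments of supplement nonrespondents described above can be contrasted with a toy calculation. Counting nonrespondents as nonvoters puts them in the denominator. Excluding them drops them entirely, which lets overreporting among those who did answer push the estimate up. The function name, the answer codes and the weights below are hypothetical and are not CPS data.

```python
def cps_turnout_two_ways(responses):
    """responses: list of (weight, answer) where answer is "voted",
    "did_not_vote", or None for supplement nonresponse.

    Returns (turnout counting nonrespondents as nonvoters,
             turnout excluding nonrespondents)."""
    voted = sum(w for w, a in responses if a == "voted")
    answered = sum(w for w, a in responses if a is not None)
    everyone = sum(w for w, _ in responses)
    return voted / everyone, voted / answered


# Hypothetical weighted respondents.
sample = [(1.0, "voted"), (1.0, "voted"), (1.0, "did_not_vote"), (1.0, None)]
print(cps_turnout_two_ways(sample))
```

In this toy sample the first estimate is 50% and the second is about 67%. The gap between them moves whenever the nonresponse rate changes, even if true turnout does not.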

Aram Hur and Christopher Achen proposed a way to reweight the CPS data to bring survey estimates of the total number of votes cast into line with the actual vote totals in each state, reducing response bias without assuming that all nonrespondents are nonvoters. The adjustment first removes nonrespondents and ineligible voters (mostly noncitizens) from the data. Next, the weights for eligible voters are post-stratified so that the state-level turnout estimates from the CPS match the state-level turnout rates calculated by the U.S. Elections Project. This eliminates the error associated with treating nonrespondents as nonvoters and corrects for overreporting among the voting-eligible population.
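The two steps of the Hur-Achen adjustment can be sketched as follows. First, nonrespondents and ineligible adults are dropped. Then, within each state, reported voters and reported nonvoters are scaled so that weighted turnout equals the official rate, while the state's total weight is held fixed. This is a simplified illustration, not Hur and Achen's own code. The row layout, the function name and the example state "X" are hypothetical. The code assumes every state has at least one reported voter and one reported nonvoter. In practice, `official_turnout` would hold the U.S. Elections Project's state turnout rates.

```python
from collections import defaultdict


def hur_achen_reweight(rows, official_turnout):
    """Sketch of a Hur-Achen-style adjustment.

    rows: list of dicts with keys "state", "weight", "eligible" (bool) and
          "voted" (True, False, or None for supplement nonresponse).
    official_turnout: {state: official turnout rate among eligible voters}.
    Returns (row, adjusted_weight) pairs for eligible respondents only.
    Assumes every state has at least one reported voter and one nonvoter.
    """
    # Step 1: drop supplement nonrespondents and ineligible adults.
    kept = [r for r in rows if r["eligible"] and r["voted"] is not None]

    # Step 2: total weight of reported voters and nonvoters in each state.
    voters = defaultdict(float)
    nonvoters = defaultdict(float)
    for r in kept:
        (voters if r["voted"] else nonvoters)[r["state"]] += r["weight"]

    # Step 3: post-stratify on vote status within each state, keeping the
    # state's total weight fixed while forcing turnout to the official rate.
    adjusted = []
    for r in kept:
        s = r["state"]
        total = voters[s] + nonvoters[s]
        target = official_turnout[s]
        if r["voted"]:
            factor = target * total / voters[s]
        else:
            factor = (1 - target) * total / nonvoters[s]
        adjusted.append((r, r["weight"] * factor))
    return adjusted


# Hypothetical state "X": two reported voters, one nonvoter, one
# nonrespondent and one ineligible adult; official turnout assumed 50%.
rows = [
    {"state": "X", "weight": 1.0, "eligible": True, "voted": True},
    {"state": "X", "weight": 1.0, "eligible": True, "voted": True},
    {"state": "X", "weight": 1.0, "eligible": True, "voted": False},
    {"state": "X", "weight": 1.0, "eligible": True, "voted": None},
    {"state": "X", "weight": 1.0, "eligible": False, "voted": None},
]
adjusted = hur_achen_reweight(rows, {"X": 0.5})
```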

To produce a national estimate of the registration rate among all adults in the U.S., we applied the Hur-Achen adjustment to eligible voters in each CPS voting supplement from 1996 to 2014. Because the target population for this study includes all adults regardless of their eligibility to vote, CPS respondents who were not eligible to vote are retained without modification. To account for the fact that nonrespondents were removed, the weights for eligible voters were rescaled so that their share of the total population matches the share prior to the exclusion of nonrespondents.
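The final step can be sketched as a single rescaling followed by a weighted share. Eligible respondents' adjusted weights are multiplied by one factor, so that together they again sum to the eligible group's original weight, including the nonrespondents who were dropped. Ineligible adults keep their original weights. The assumptions here are labeled: the function and argument names are hypothetical, and ineligible adults are counted as not registered. For the `registered` flag, reported voters count as registered and reported nonvoters use their answer to the registration question, following the CPS coding described earlier.

```python
def national_registration_rate(eligible_adjusted, ineligible_weights,
                               eligible_total_before_exclusion):
    """Share of ALL adults who are registered.

    eligible_adjusted: (weight, registered) pairs for eligible respondents
        after a Hur-Achen-style reweighting (nonrespondents already removed).
    ineligible_weights: original weights of ineligible adults, left unchanged;
        they are counted as not registered (an assumption of this sketch).
    eligible_total_before_exclusion: total original weight of all eligible
        adults, including the nonrespondents who were dropped.
    """
    # Rescale eligible weights so the eligible group regains its original
    # share of the total adult population.
    adjusted_total = sum(w for w, _ in eligible_adjusted)
    scale = eligible_total_before_exclusion / adjusted_total
    registered = sum(w * scale for w, reg in eligible_adjusted if reg)
    population = eligible_total_before_exclusion + sum(ineligible_weights)
    return registered / population


# Hypothetical inputs: two eligible respondents after reweighting, one
# dropped nonrespondent (original weight 1.0) and one ineligible adult.
rate = national_registration_rate([(1.5, True), (1.5, False)], [1.0], 4.0)
```

Because one factor is applied to all eligible respondents, their relative weights from the state-level adjustment are preserved. Only the eligible group's overall share of the population is restored.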

Pew Research Center is a nonprofit, tax-exempt 501(c)(3) organization and a subsidiary of The Pew Charitable Trusts, its primary funder.

© Pew Research Center, 2017